In this notebook I want to reproduce in PyMC the methodology described in the amazing blog post Modeling Short Time Series with Prior Knowledge by Tim Radtke to forecast short time series using bayesian transfer learning 🚀. The main idea is to transfer information (e.g. long term seasonality) from a long time series to a short time series via prior distributions. Tim’s blog post treats a very concrete example where all the concepts become very concrete. The challenge of the example is to generate long term forecast for a short time series of bike sales data. Specifically, the input sales data consists of three months of daily data and the objective is to generate at least a two years forecast. In general this is very hard to to with commonly available methods (as we will show below) due the fact we do not have enough historical data to capture seasonal patterns. For this concrete example, we do expect to have a strong yearly seasonal pattern as bike sales are usually much higher during summer than in winter. Hence, we could use temperature as a proxy for this seasonal pattern. However, as mentioned above, we can not simply try to use such data in a model with just 3 months of daily data … ¯\(ツ)/¯ …

💡Here s the elegant trick: First, fit a model on long past historical temperature data through Fourier modes (as in Facebook’s Prophet model). Then use the posterior means and standard deviations of each Fourier mode as the prior distribution for the sales short time series \(y_t\). For the later model we use a negative binomial distribution as the likelihood function (as we are modeling count data, i.e. the number of sales) and model the mean \(\mu_t\) through three main components:

- A seasonal component which consists of Fourier modes with the specified priors, dummy variables to model the weekly seasonality and a mild trend component.

- We use an autoregressive term \(\mu_{t -1}\) on the mean itself.

- An autoregressive term \(y_{t - 1}\) from the input sales data.

By combining the the long term seasonality learned in the temperature model in this way we can adapt it to match the scale of the sales data. We will describe the full model specification below.

I was fortunate to see Tim’s talk about the subject back in 2019 during the satRdays Berlin conference (where I also gave a talk on Remedies for Severe Class Imbalance). I remember listening to such great presentation ant thinking Wow! This is a fantastic idea! At that time I was not able to follow the modeling strategy 100%, but I kept the idea in mind. Since then, I have been faced a couple of times to the challenge of forecasting short time series where there is an expected seasonal pattern but not enough historical data. Of course, when there are many time series, the problem can be tackled using probabilistic forecasting as well (see for example deepAR). However, the strategy presented on Tim’s blog post is very elegant and can serve situations where the number of time series is small (which is often the case in certain domains, e.g. marketing). Hence, I decided to try it out in PyMC! Moreover, while trying to reproduce this work I also learned some very useful things on the side:

The particular models (which we describe below) to perform the transfer learning are very interesting (specially the local level model) and I can see similar variations being applicable in many real world problems.

Learn to read Stan code and understand how it works. This is because the code is provided in R and the model is sampled using Stan. Please find the code of the blog post here (kudos to Tim for sharing it and making the results fully reproducible!).

Learn how to use

aesara.scanto run loops efficiently inside a PyMC model. I must admit that learning howaesara.scanworks was not very easy and I really had to work a concrete example and carefully read documentation to get it work. I still need some more practice but I have a much better intuition now. I share some findings and extended explanations on an appendix at the end of this notebook.

I strongly recommend to read Tim’s original blog post Modeling Short Time Series with Prior Knowledge first to enjoy a clear understanding of the problem and the underlying ideas. The purpose of this notebook is mainly to reproduce his results (and the details behind the PyMC code) and not to give a detailed explanation of the model, specially the remarkable prior distributions analysis done by Tim. In addition, the original post was motivated by the short post Fitting models to short time series by Rob J Hyndman.

For the experienced readers: if you have know a better way to re-write or optimize this PyMC implementation I would love to hear about it. The time it takes to sample is comparable to the time it takes to run the Stan code through R.

Data Sources:

Sales Data: Publicly available Citi Bike data from station 360 in New York City, see here.

Temperature Data: Available on Kaggle, see here.

Prepare Notebook

import pytensor

import pytensor.tensor as pt

import arviz as az

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import pymc as pm

import seaborn as sns

plt.style.use("bmh")

plt.rcParams["figure.figsize"] = [12, 6]

plt.rcParams["figure.dpi"] = 100

plt.rcParams["figure.facecolor"] = "white"

%load_ext rich

%load_ext autoreload

%autoreload 2

%config InlineBackend.figure_format = "retina"Read Data

We get the data from the original blog post repository github.com/timradtke/short-time-series. For the purpose of this notebook, I already combined (outer join on date) the sales and temperature data.

raw_df = pd.read_csv("../data/sales.csv", parse_dates=["date"])

raw_df.head()

| date | sales | temp | |

|---|---|---|---|

| 0 | 2013-07-01 | 57.0 | 303.0 |

| 1 | 2013-07-02 | 58.0 | 298.0 |

| 2 | 2013-07-03 | 53.0 | 301.0 |

| 3 | 2013-07-04 | 36.0 | 302.0 |

| 4 | 2013-07-05 | 29.0 | 305.0 |

EDA

Let us start by looking into the data.

fig, ax = plt.subplots(

nrows=2, ncols=1, figsize=(10, 6), sharex=True, sharey=False, layout="constrained"

)

sns.lineplot(x="date", y="sales", data=raw_df, color="black", ax=ax[0])

sns.lineplot(x="date", y="temp", data=raw_df, color="C0", ax=ax[1])

fig.suptitle("Raw Data", fontsize=16)

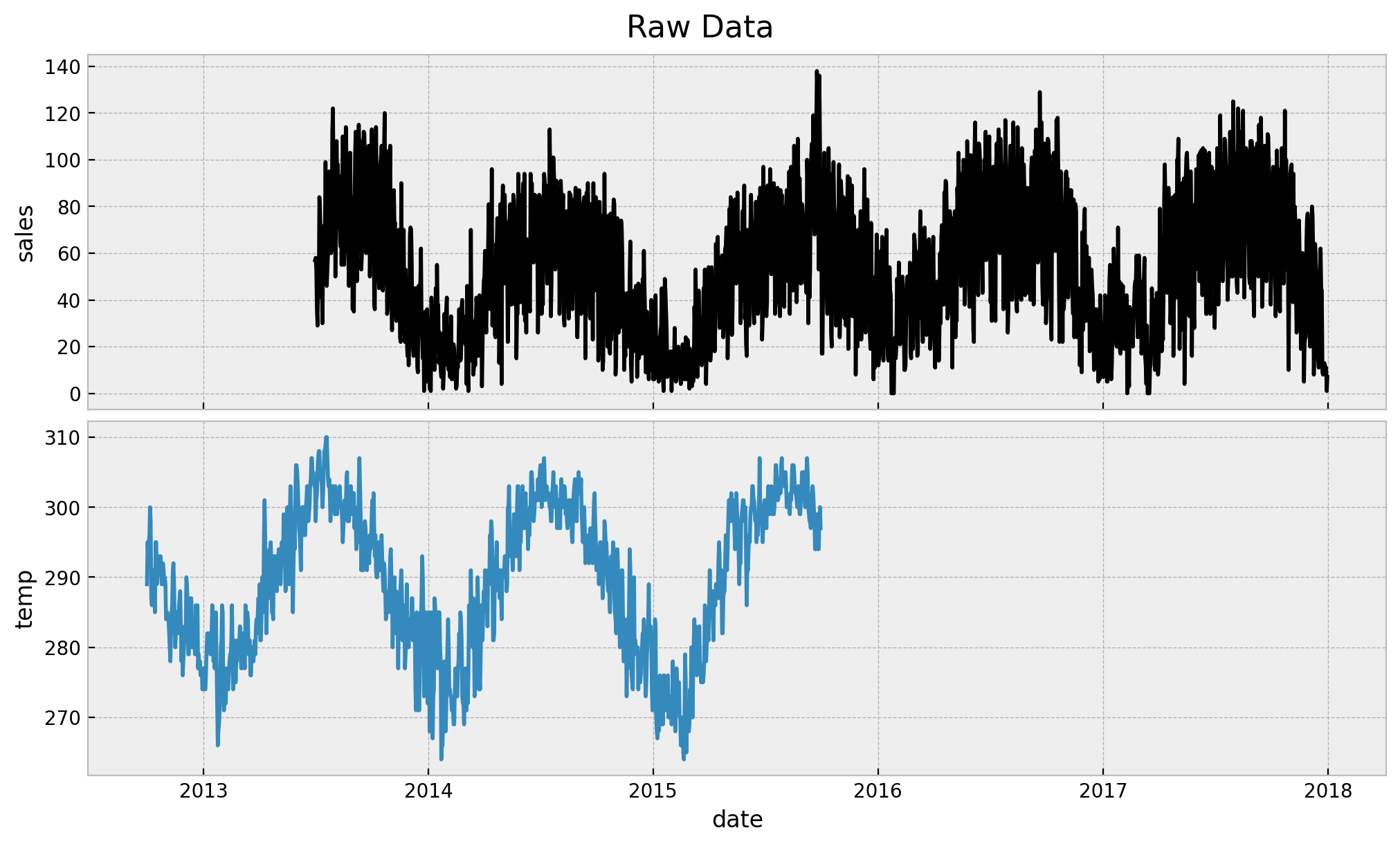

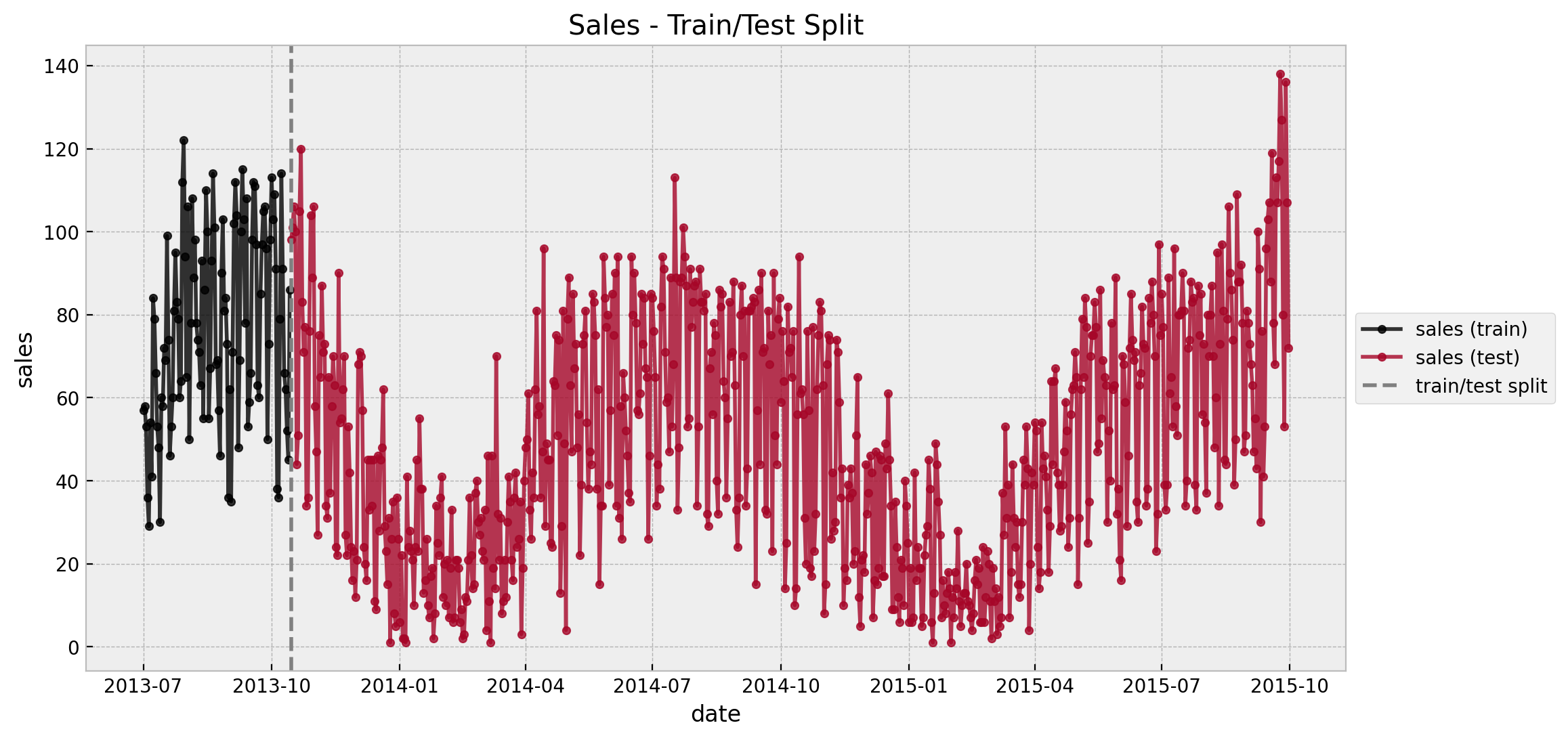

The first thing we observe is that we long data for both sales and temperature. Note however that our assumption is that we just have 3 months of historical sales data. The rest of the sales data is used as a “test set”. On the other hand we do use all the available temperature data since the main idea s to have a predictor which is available in the future at prediction time (this could be the temperature forecast in real cases). Next, let us look at the sales data when both of them are not null:

mask = "sales.notnull() and temp.notnull()"

fig, ax = plt.subplots(

nrows=2, ncols=1, figsize=(10, 6), sharex=True, sharey=False, layout="constrained"

)

sns.lineplot(x="date", y="sales", data=raw_df.query(mask), color="black", ax=ax[0])

sns.lineplot(x="date", y="temp", data=raw_df.query(mask), color="C0", ax=ax[1])

ax[0].set(title="Sales")

ax[1].set(title="Temperature")

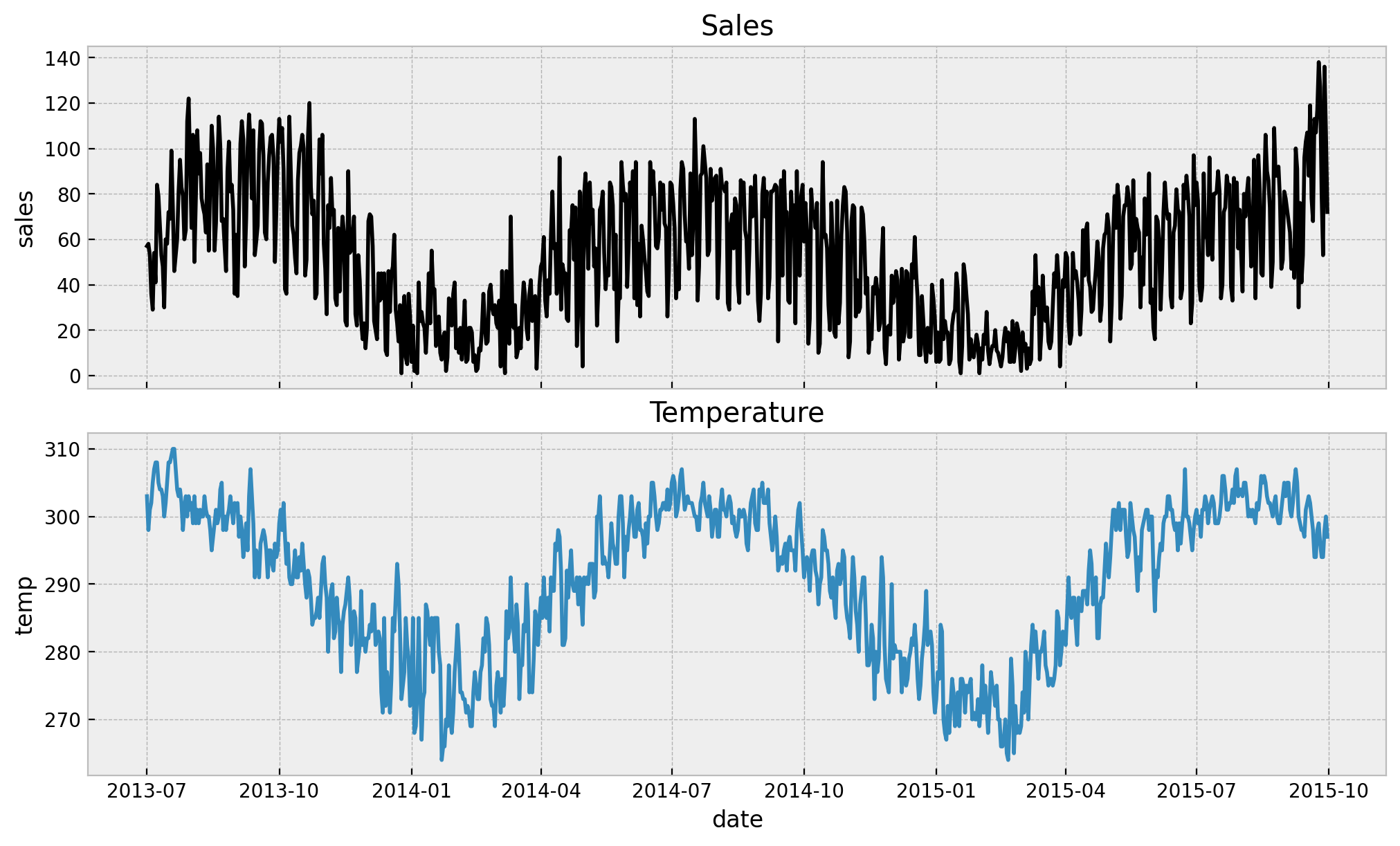

We see a strong yearly seasonal pattern both variables. Now we move intro the data preparation step.

Data Preparation

Let us set the start and end date of the training set for the sales model.

start_date = pd.to_datetime("2013-07-01")

train_test_date = pd.to_datetime("'2013-10-15'")

print(f"Training Set Length: {(train_test_date - start_date).days} days")

Training Set Length: 106 daysNow we split the data accordingly. In the process we also add two features: 1. We scale the temperature data 2. We create a trend component.

Please refer to the original blog post for a detailed explanation behind the motivation of these transformations. In a nutshell, we scale the temperature data as we are interested in relative values (so we subtract the minimum) and the trend is something we expect to be present in the long ter forecast, see below (again, the scales is not important).

# data points before the start of the training set

n_init = raw_df.sort_values("date").query("date < '2013-07-01'").shape[0]

df = (

raw_df.query("temp.notnull()")

.sort_values("date")

.reset_index(drop=True)

.eval(

"""

temp_scaled = temp - temp.min()

trend = (sales.index - @n_init + 1) / 365.25

""",

engine="python",

)

)

df_train = df.query("date < @train_test_date & sales.notnull()")

df_test = df.query("date >= @train_test_date")

n_train = df_train.shape[0]

n_test = df_test.shape[0]

n = n_test + n_trainLet’s visualize the sales model training set.

fig, ax = plt.subplots()

sns.lineplot(

x="date",

y="sales",

data=df_train,

color="black",

marker="o",

markersize=4,

markeredgecolor="black",

ax=ax,

)

ax.set(title="Sales (Training Set)")

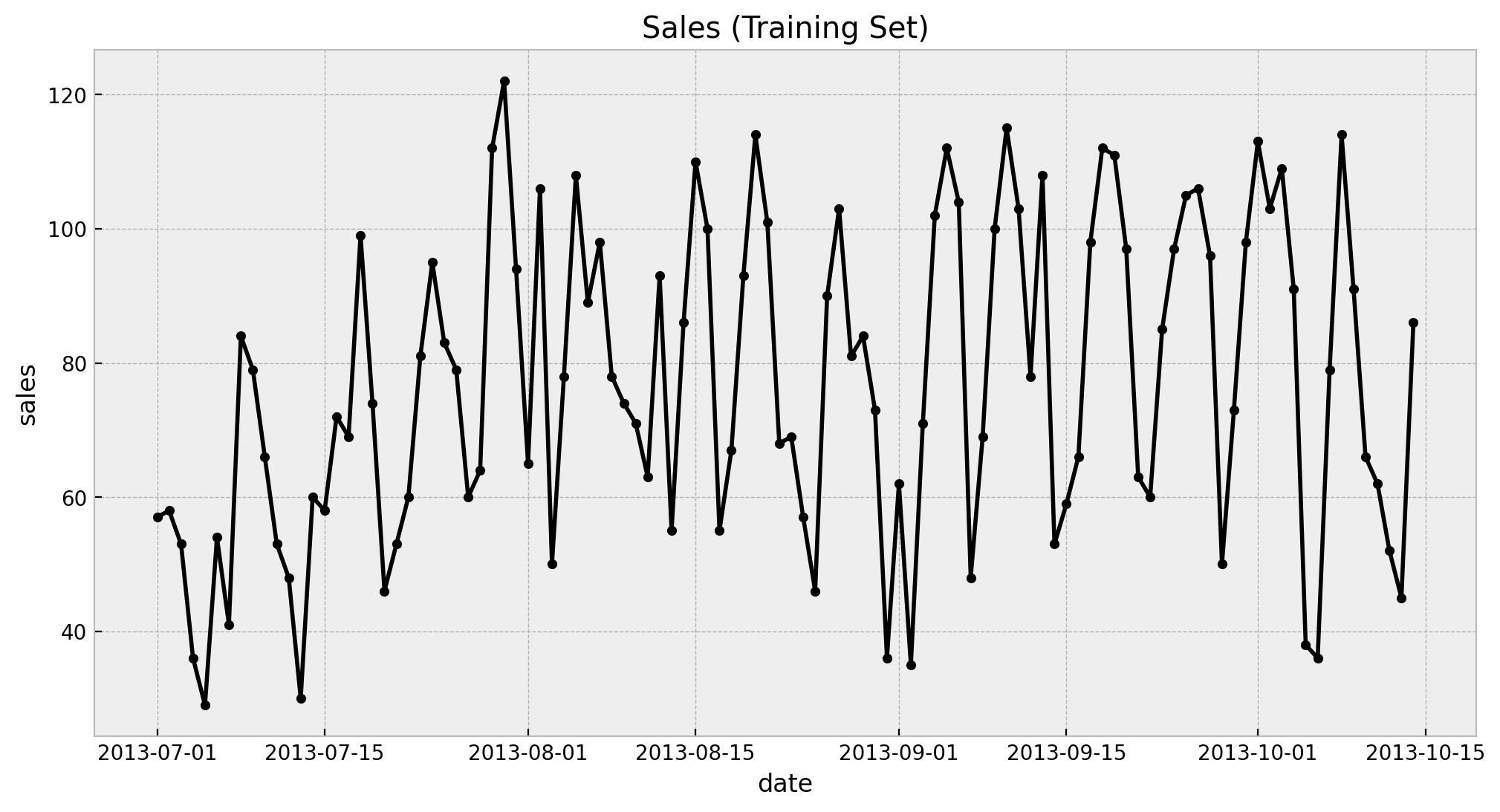

We see a clearly weekly pattern and a mild positive trend. Finally we plot the train and test split.

fig, ax = plt.subplots()

sns.lineplot(

x="date",

y="sales",

data=df_train,

marker="o",

color="black",

alpha=0.8,

markersize=4,

markeredgecolor="black",

label="sales (train)",

ax=ax,

)

sns.lineplot(

x="date",

y="sales",

data=df_test,

marker="o",

color="C1",

alpha=0.8,

markersize=4,

markeredgecolor="C1",

label="sales (test)",

ax=ax,

)

ax.axvline(x=train_test_date, color="gray", linestyle="--", label="train/test split")

ax.legend(loc="center left", bbox_to_anchor=(1, 0.5))

ax.set(title="Sales - Train/Test Split")

Temperature Model

Now that we are more familiar with the data we proceed to build the temperature model as described in the original blog post: We fit a Poisson regression (temperature is in Fahrenheit, so are integers) where the mean is modeled using Fourier modes and and intercept. Note we use the complete set of available temperature values. Mathematically,

\[\begin{align*} \text{temp\_scaled}_{t} & \sim \text{Poisson}(\mu_{t}) \\ \log(\mu_{t}) & = a + \sum_{k=1}^{K=6} b_{k}\sin\left(\frac{2\pi k t}{m}\right) + \tilde{b}_{k}\cos\left(\frac{2\pi k t}{m}\right), \quad m = 365.25 \\ a & \sim \text{Normal}(0, 1) \\ b_{k}, \tilde{b}_{k} & \sim \text{Normal}(0, 1) \end{align*}\]

# We extract useful features.

date = df["date"]

temp_scaled = df["temp_scaled"]

trend = df["trend"]

sales = df["sales"]

# We extract the day of week for the sales model below.

dayofweek_idx, dayofweek = df["date"].dt.dayofweek.factorize()

We generate the Fourier modes as described in Air passengers - Prophet-like model.

periods = df["date"].dt.dayofyear / 365.25

n_order = 6

fourier_features = pd.DataFrame(

{

f"{func}_order_{order}": getattr(np, func)(2 * np.pi * periods * order)

for order in range(1, n_order + 1)

for func in ("sin", "cos")

}

)

Now we are ready to define the model.

coords = {

"date": date,

"fourier_features": np.arange(2 * n_order),

}

with pm.Model(coords=coords) as temp_model:

# --- priors ---

## intercept

a = pm.Normal(name="a", mu=0, sigma=1)

## seasonality

b_fourier = pm.Normal(name="b_fourier", mu=0, sigma=1, dims="fourier_features")

# --- model parametrization ---

seasonality = pm.Deterministic(

"seasonality", pm.math.dot(b_fourier, fourier_features.T), dims="date"

)

mu = pm.Deterministic(name="mu", var=a + seasonality, dims="date")

# --- likelihood ---

pm.Poisson("likelihood", mu=pm.math.exp(mu), observed=temp_scaled, dims="date")

pm.model_to_graphviz(temp_model)

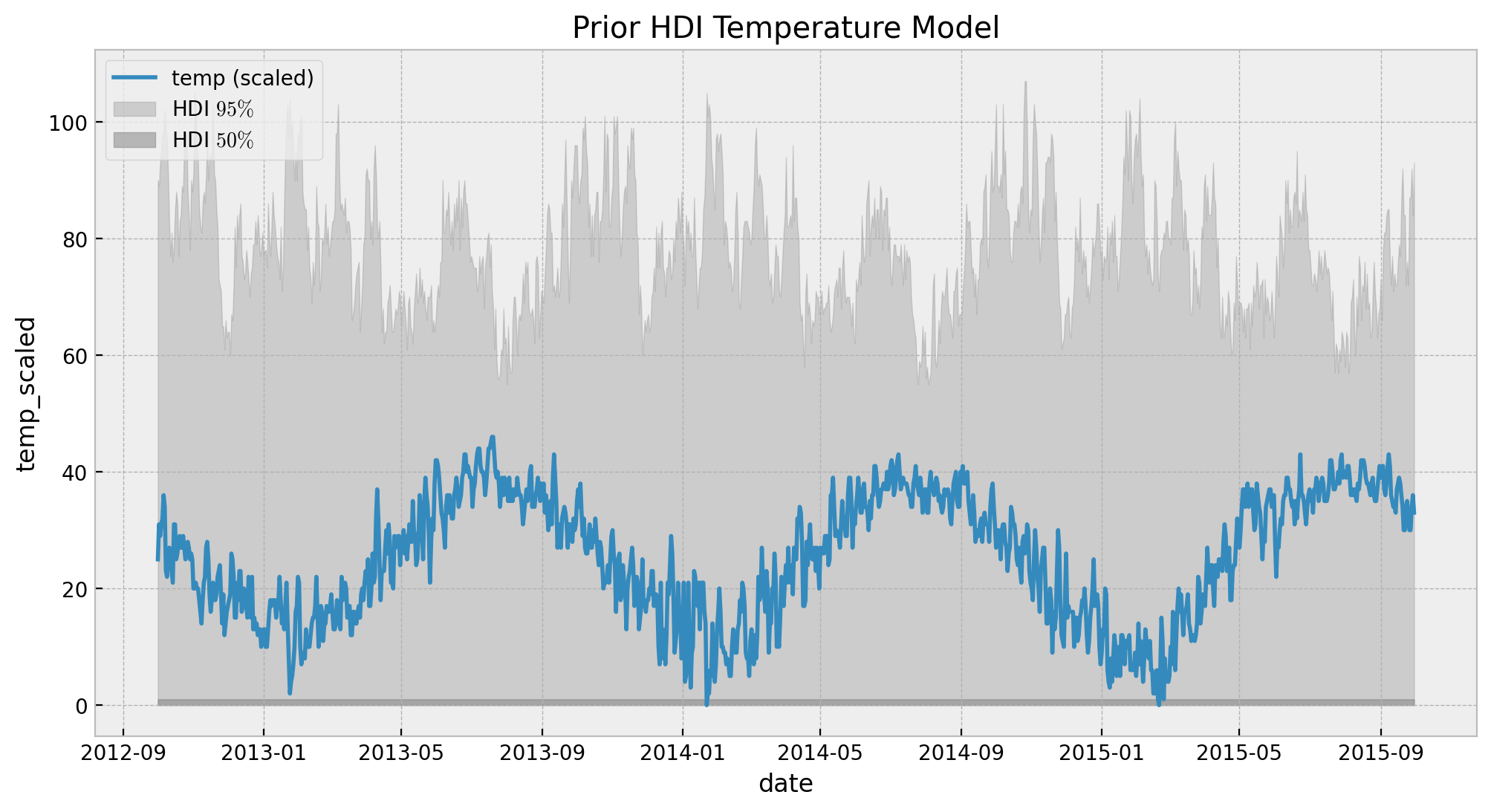

Next we run some prior predictive checks.

with temp_model:

temp_prior_predictive = pm.sample_prior_predictive(samples=1000)

fig, ax = plt.subplots()

sns.lineplot(

x="date", y="temp_scaled", data=df, color="C0", label="temp (scaled)", ax=ax

)

az.plot_hdi(

x=date,

y=temp_prior_predictive.prior_predictive["likelihood"],

hdi_prob=0.95,

color="gray",

smooth=False,

fill_kwargs={"label": r"HDI $95\%$", "alpha": 0.3},

ax=ax,

)

az.plot_hdi(

x=date,

y=temp_prior_predictive.prior_predictive["likelihood"],

hdi_prob=0.5,

color="gray",

smooth=False,

fill_kwargs={"label": r"HDI $50\%$", "alpha": 0.5},

ax=ax,

)

ax.legend(loc="upper left")

ax.set(title="Prior HDI Temperature Model")

Looks the values are feasible and not very constraining. Hence, we proceed to fit the model.

with temp_model:

temp_idata = pm.sample(

target_accept=0.9, draws=4000, chains=4, nuts_sampler="numpyro"

)

temp_posterior_predictive = pm.sample_posterior_predictive(trace=temp_idata)

Let’s see the summary and plot the trace.

az.summary(data=temp_idata, var_names=["a", "b_fourier"])

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| a | 3.146 | 0.007 | 3.134 | 3.159 | 0.0 | 0.0 | 18131.0 | 12857.0 | 1.0 |

| b_fourier[0] | -0.248 | 0.009 | -0.265 | -0.231 | 0.0 | 0.0 | 19331.0 | 12477.0 | 1.0 |

| b_fourier[1] | -0.547 | 0.010 | -0.565 | -0.530 | 0.0 | 0.0 | 17508.0 | 12869.0 | 1.0 |

| b_fourier[2] | -0.122 | 0.009 | -0.139 | -0.104 | 0.0 | 0.0 | 17170.0 | 12433.0 | 1.0 |

| b_fourier[3] | -0.049 | 0.009 | -0.066 | -0.031 | 0.0 | 0.0 | 21489.0 | 12654.0 | 1.0 |

| b_fourier[4] | -0.046 | 0.009 | -0.064 | -0.029 | 0.0 | 0.0 | 18678.0 | 13323.0 | 1.0 |

| b_fourier[5] | 0.047 | 0.009 | 0.029 | 0.065 | 0.0 | 0.0 | 19383.0 | 12526.0 | 1.0 |

| b_fourier[6] | -0.005 | 0.009 | -0.022 | 0.013 | 0.0 | 0.0 | 17961.0 | 12885.0 | 1.0 |

| b_fourier[7] | 0.046 | 0.009 | 0.028 | 0.063 | 0.0 | 0.0 | 20264.0 | 12846.0 | 1.0 |

| b_fourier[8] | -0.000 | 0.009 | -0.018 | 0.017 | 0.0 | 0.0 | 18595.0 | 12557.0 | 1.0 |

| b_fourier[9] | 0.016 | 0.009 | -0.001 | 0.034 | 0.0 | 0.0 | 17999.0 | 13554.0 | 1.0 |

| b_fourier[10] | 0.007 | 0.009 | -0.010 | 0.024 | 0.0 | 0.0 | 21195.0 | 13060.0 | 1.0 |

| b_fourier[11] | 0.017 | 0.009 | 0.001 | 0.034 | 0.0 | 0.0 | 19795.0 | 12503.0 | 1.0 |

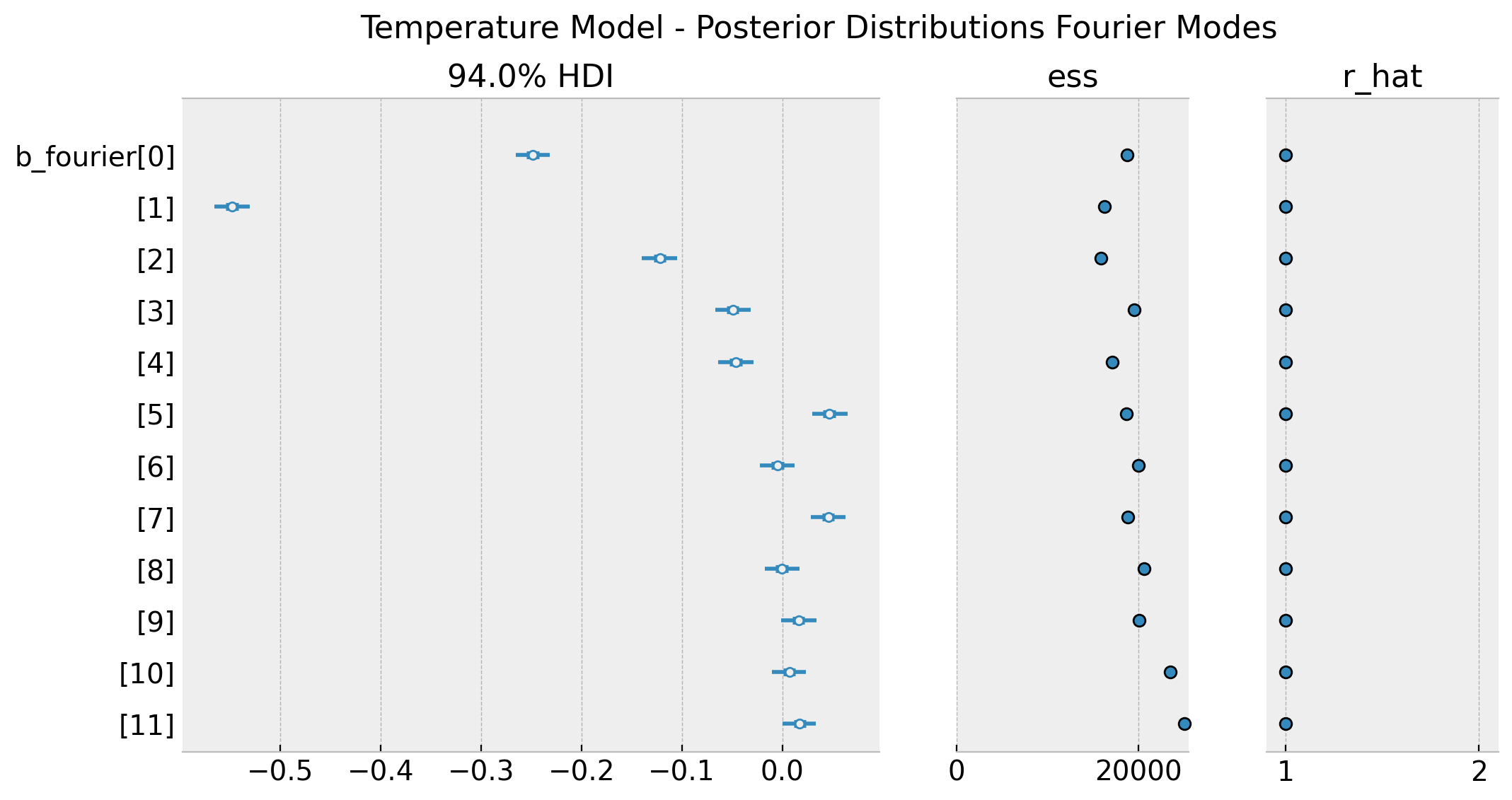

ax = az.plot_forest(

kind="forestplot",

data=temp_idata,

var_names=["b_fourier"],

combined=True,

r_hat=True,

ess=True,

figsize=(12, 6),

)

plt.gcf().suptitle(

"Temperature Model - Posterior Distributions Fourier Modes", fontsize=16

)

These distributions match the ones obtained in the original blog post (see figure Posterior linear combination of the Fourier terms for the temperature model). To end this section we simply plot the posterior predictive distribution.

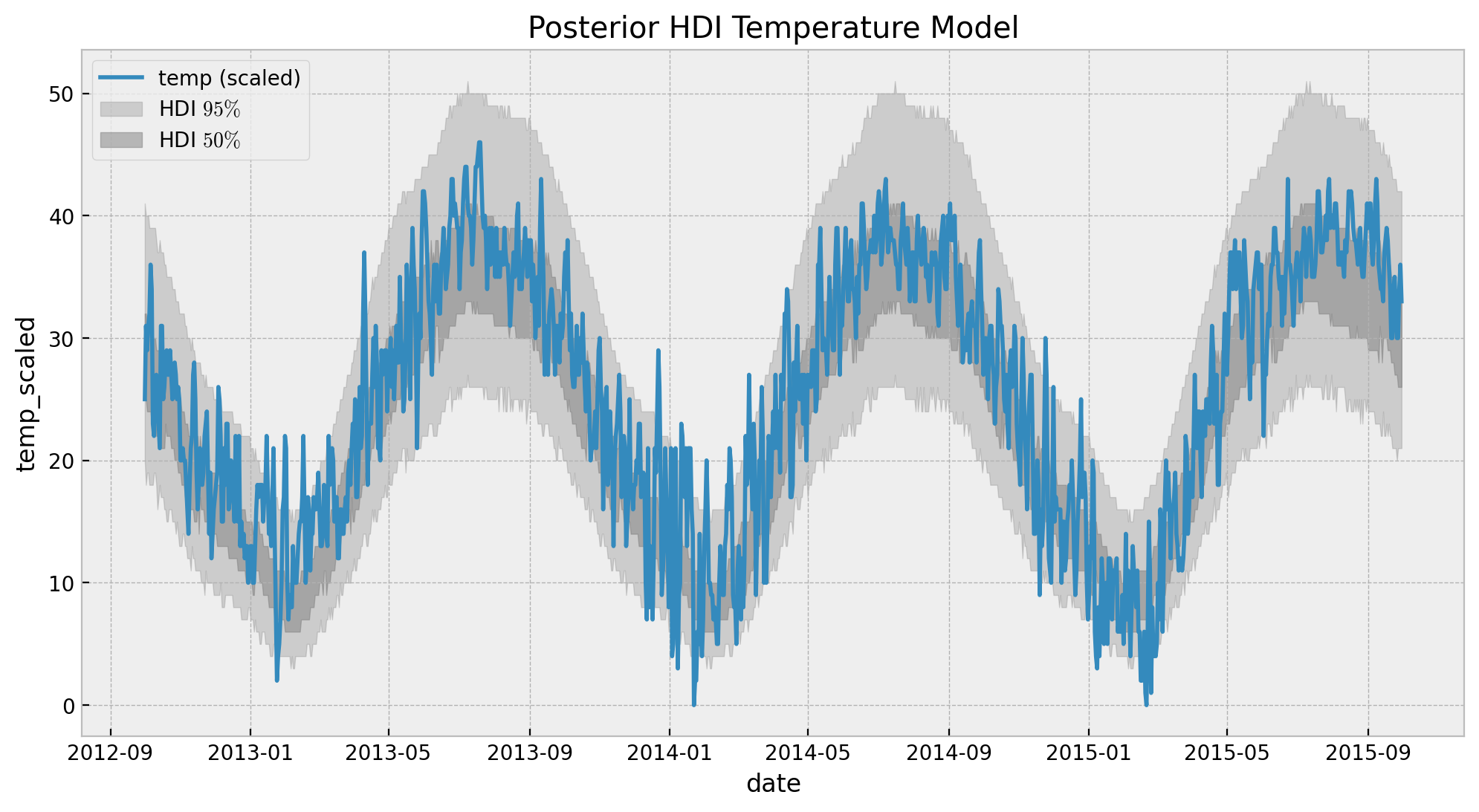

fig, ax = plt.subplots()

sns.lineplot(

x="date", y="temp_scaled", data=df, color="C0", label="temp (scaled)", ax=ax

)

az.plot_hdi(

x=date,

y=temp_posterior_predictive.posterior_predictive["likelihood"],

hdi_prob=0.95,

color="gray",

smooth=False,

fill_kwargs={"label": r"HDI $95\%$", "alpha": 0.3},

ax=ax,

)

az.plot_hdi(

x=date,

y=temp_posterior_predictive.posterior_predictive["likelihood"],

hdi_prob=0.5,

color="gray",

smooth=False,

fill_kwargs={"label": r"HDI $50\%$", "alpha": 0.5},

ax=ax,

)

ax.legend(loc="upper left")

ax.set(title="Posterior HDI Temperature Model")

Sales Model

Now we come to the core of this notebook: build the sales model using the output of the temperature model. To begin with, we define extract similar features as above but just for the training period.

date_train = df_train["date"]

sales_train = df_train["sales"]

trend_train = df_train["trend"]

dayofweek_idx_train, dayofweek_train = df_train["date"].dt.dayofweek.factorize()

periods_train = df_train["date"].dt.dayofyear / 365.25

n_order = 6

fourier_features_train = pd.DataFrame(

{

f"{func}_order_{order}": getattr(np, func)(2 * np.pi * periods_train * order)

for order in range(1, n_order + 1)

for func in ("sin", "cos")

}

)

Next we extract the means and standard deviations of the learned Fourier modes posterior distributions.

temp_model_summary = az.summary(data=temp_idata, var_names=["b_fourier"])

fourier_loc = temp_model_summary["mean"]

fourier_sd = temp_model_summary["sd"]

Let’s now be explicit about the model structure:

\[\begin{align*} \text{sales}_{t} & \sim \text{NegativeBinomial}(\mu_{t}, \alpha) \\ \mu_{t} & = (1 - \delta - \eta) \lambda_{t} + \delta \mu_{t-1} + \eta \: \text{sales}_{t-1}, \quad 0 \leq \delta \leq 1, 0 \leq \eta \leq 1 - \delta \\ \log(\lambda_{t}) & = b_{\text{trend}}\frac{t}{m} + \sum_{j=1}^{7}b_{\text{dow}, j}\text{dayofweek}_{t} + \sum_{k=1}^{K=6} b_{k}'\sin\left(\frac{2\pi k t}{m}\right) + \tilde{b}_{k} '\cos\left(\frac{2\pi k t}{m}\right), \quad m = 365.25 \\ \alpha & = \frac{1}{\tilde{\alpha}^2} \\ \delta & \sim \text{Beta}(1, 10) \\ \eta & \sim \text{Gamma}(1, 10) \\ b_{\text{trend}} & \sim \text{Normal}(0.03, 0.02) \\ b_{\text{dow}, j} & \sim \text{Normal}(4, 2) \\ b_{k}' & \sim \text{Normal}(\text{E}[b_{k}], \sqrt{\text{Var}[b_{k}]}) \\ \tilde{b}_{k}' & \sim \text{Normal}(\text{E}[\tilde{b}_{k}], \sqrt{\text{Var}[\tilde{b}_{k}]}) \\ \tilde{\alpha} & \sim \text{HalfNormal}(0.5) \end{align*}\]

In summary, we model the sales using a negative binomial likelihood. The mean \(\mu_t\) of such distribution is modeled using three components: seasonality (\(\lambda_t\)), an autoregressive term on the latent mean (\(\mu_{t - 1}\)) and an autoregressive sales model. The seasonality component includes a linear trend, in-week seasonality via day of week indicator functions and long term seasonality modeled using Fourier modes. The key point to note is that the prior of such Fourier modes are actually determined by the posterior distribution obtained from the temperature model.

Now we write the model above in PyMC.

eps = np.finfo(float).eps

coords = {

"fourier_features": np.arange(2 * n_order),

}

with pm.Model(coords=coords) as sales_model:

# --- data containers ---

sales_model.add_coord(name="date", values=date_train, mutable=True)

sales_model.add_coord(name="dayofweek", values=dayofweek_train, mutable=True)

dayofweek_data = pm.MutableData(

name="dayofweek_data", value=dayofweek_idx_train, dims="date"

)

fourier_features_data = pm.MutableData(

name="fourier_features_data",

value=fourier_features_train.to_numpy().T,

dims=("fourier_features", "date"),

)

trend_data = pm.MutableData(name="trend", value=trend_train, dims="date")

sales_data = pm.MutableData(name="sales", value=sales_train, dims="date")

# --- priors ---

delta = pm.Beta(name="delta", alpha=1, beta=10)

eta = pm.Gamma(name="eta", alpha=0.5, beta=10)

b_fourier = pm.Normal(

name="b_fourier", mu=fourier_loc, sigma=fourier_sd, dims="fourier_features"

)

b_dayofweek = pm.Normal(name="b_dayofweek", mu=4, sigma=2, dims="dayofweek")

b_trend = pm.Normal(name="b_trend", mu=0.03, sigma=0.02)

alpha_inv = pm.HalfNormal(name="alpha_inv", sigma=0.5)

# --- model parametrization ---

## parameters constraints

pm.Potential(name="constrain", var=pt.switch(eta > 1 - delta, -np.inf, 0))

# transferred trend and seasonality

fourier_contribution = pm.Deterministic(

name="fourier_contribution",

var=pm.math.dot(b_fourier, fourier_features_data),

dims="date",

)

dayofweek_contribution = pm.Deterministic(

name="dayofweek_contribution", var=b_dayofweek[dayofweek_data], dims="date"

)

trend_contribution = pm.Deterministic(

name="trend_contribution", var=b_trend * trend_data, dims="date"

)

seasonality = pm.Deterministic(

name="seasonality",

var=pm.math.exp(

fourier_contribution + dayofweek_contribution + trend_contribution

),

dims="date",

)

## damped dynamic mean

mu0 = pm.MutableData(

name="mu0", value=np.zeros(sales_data.shape.eval()[0]), dims="date"

)

mu0 = pt.set_subtensor(mu0[0], sales_data[0])

def one_step(seasonality_t, sales_tm1, mu_tm1, delta, eta):

return (1 - delta - eta) * seasonality_t + delta * mu_tm1 + eta * sales_tm1

outputs, _ = pytensor.scan(

fn=one_step,

sequences=[

dict(input=seasonality[1:], taps=[0]),

dict(input=sales_data, taps=[-1]),

],

outputs_info=dict(initial=mu0, taps=[-1]),

non_sequences=[delta, eta],

strict=True,

)

mu = pm.Deterministic(

name="mu", var=pt.set_subtensor(mu0[1:], outputs[:, 0]), dims="date"

)

alpha = pm.Deterministic(name="alpha", var=1 / (pm.math.sqr(alpha_inv) + eps))

# --- likelihood ---

pm.NegativeBinomial(

name="likelihood", alpha=alpha, mu=mu + eps, observed=sales_data, dims="date"

)

pm.model_to_graphviz(sales_model)

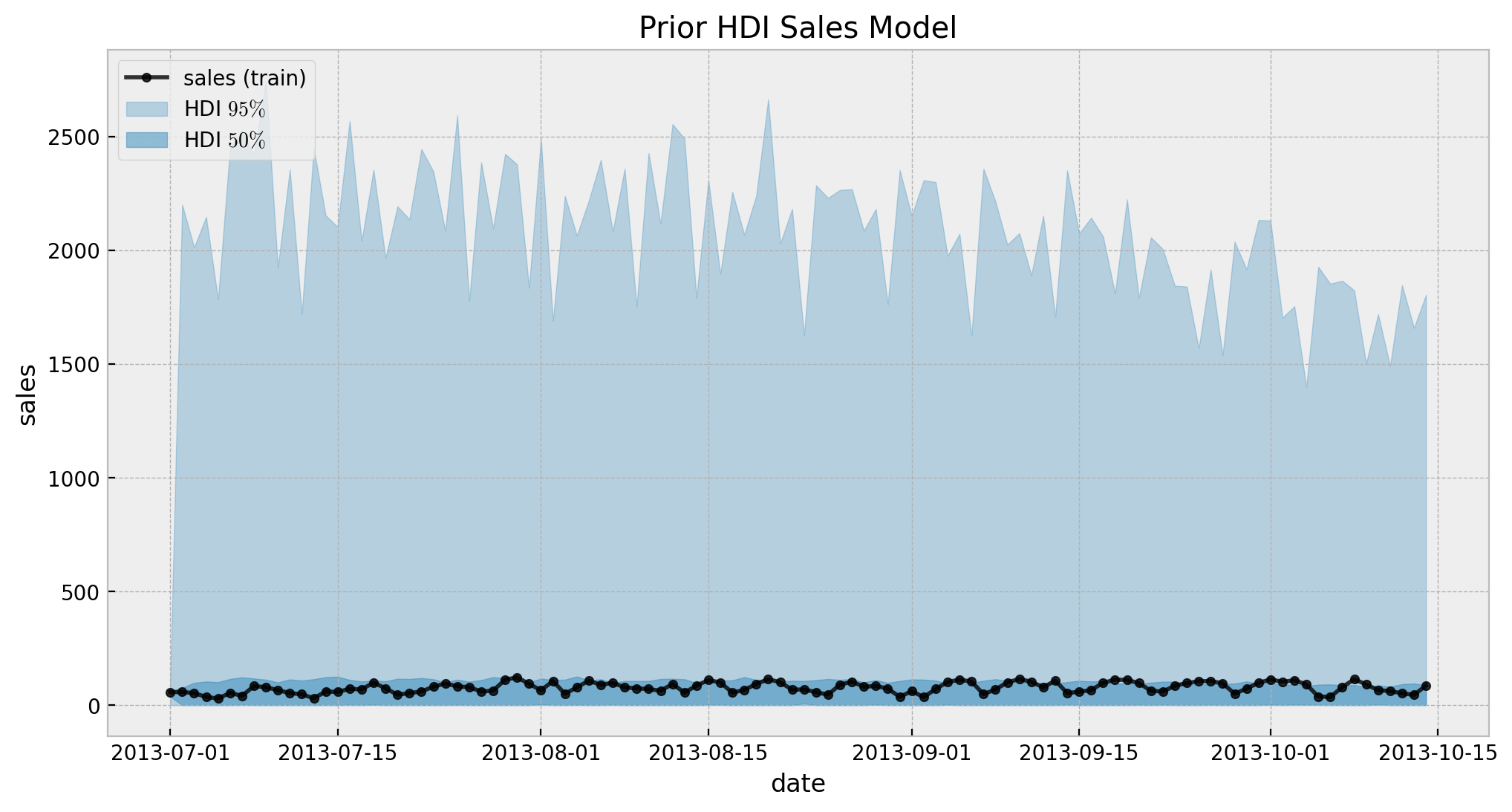

As always, is always good to run prior predictive checks before fitting the model.

with sales_model:

sales_prior_predictive = pm.sample_prior_predictive(samples=1000)

fig, ax = plt.subplots()

sns.lineplot(

x="date",

y="sales",

data=df_train,

marker="o",

color="black",

alpha=0.8,

markersize=4,

markeredgecolor="black",

label="sales (train)",

)

az.plot_hdi(

x=date_train,

y=sales_prior_predictive.prior_predictive["likelihood"],

hdi_prob=0.95,

color="C0",

smooth=False,

fill_kwargs={"label": r"HDI $95\%$", "alpha": 0.3},

ax=ax,

)

az.plot_hdi(

x=date_train,

y=sales_prior_predictive.prior_predictive["likelihood"],

hdi_prob=0.5,

color="C0",

smooth=False,

fill_kwargs={"label": r"HDI $50\%$", "alpha": 0.5},

ax=ax,

)

ax.legend(loc="upper left")

ax.set(title="Prior HDI Sales Model")

Overall, looks ok. Now we fit the model.

with sales_model:

sales_idata = pm.sample(

target_accept=0.97, draws=4_000, chains=4, nuts_sampler="numpyro"

)

sales_posterior_predictive = pm.sample_posterior_predictive(trace=sales_idata)# compute divergences

sales_idata["sample_stats"]["diverging"].sum().item()0We can now look into the posterior diagnostic and posterior distributions.

var_names = ["b_fourier", "b_dayofweek", "b_trend", "delta", "eta", "alpha"]az.summary(data=sales_idata, var_names=var_names)

| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| b_fourier[0] | -0.250 | 0.009 | -0.267 | -0.233 | 0.000 | 0.000 | 20700.0 | 12388.0 | 1.0 |

| b_fourier[1] | -0.545 | 0.010 | -0.563 | -0.525 | 0.000 | 0.000 | 19895.0 | 10213.0 | 1.0 |

| b_fourier[2] | -0.122 | 0.009 | -0.139 | -0.106 | 0.000 | 0.000 | 20720.0 | 11315.0 | 1.0 |

| b_fourier[3] | -0.052 | 0.009 | -0.070 | -0.035 | 0.000 | 0.000 | 21254.0 | 11850.0 | 1.0 |

| b_fourier[4] | -0.044 | 0.009 | -0.061 | -0.028 | 0.000 | 0.000 | 21177.0 | 11786.0 | 1.0 |

| b_fourier[5] | 0.049 | 0.009 | 0.033 | 0.066 | 0.000 | 0.000 | 20297.0 | 11523.0 | 1.0 |

| b_fourier[6] | -0.006 | 0.009 | -0.023 | 0.011 | 0.000 | 0.000 | 21000.0 | 11436.0 | 1.0 |

| b_fourier[7] | 0.046 | 0.009 | 0.029 | 0.063 | 0.000 | 0.000 | 19824.0 | 10806.0 | 1.0 |

| b_fourier[8] | -0.000 | 0.009 | -0.017 | 0.017 | 0.000 | 0.000 | 22771.0 | 11765.0 | 1.0 |

| b_fourier[9] | 0.017 | 0.009 | -0.000 | 0.033 | 0.000 | 0.000 | 20915.0 | 10981.0 | 1.0 |

| b_fourier[10] | 0.006 | 0.009 | -0.011 | 0.023 | 0.000 | 0.000 | 23830.0 | 11162.0 | 1.0 |

| b_fourier[11] | 0.014 | 0.009 | -0.002 | 0.031 | 0.000 | 0.000 | 20650.0 | 11412.0 | 1.0 |

| b_dayofweek[0] | 4.626 | 0.252 | 4.183 | 5.109 | 0.005 | 0.003 | 2962.0 | 3331.0 | 1.0 |

| b_dayofweek[1] | 4.296 | 0.238 | 3.881 | 4.768 | 0.003 | 0.002 | 6852.0 | 5664.0 | 1.0 |

| b_dayofweek[2] | 3.938 | 0.417 | 3.260 | 4.552 | 0.008 | 0.005 | 7202.0 | 3146.0 | 1.0 |

| b_dayofweek[3] | 3.531 | 0.645 | 2.367 | 4.359 | 0.012 | 0.009 | 6337.0 | 3276.0 | 1.0 |

| b_dayofweek[4] | 3.254 | 0.852 | 1.552 | 4.219 | 0.016 | 0.011 | 3742.0 | 3953.0 | 1.0 |

| b_dayofweek[5] | 1.420 | 1.096 | -0.588 | 3.208 | 0.012 | 0.009 | 8685.0 | 9789.0 | 1.0 |

| b_dayofweek[6] | 3.692 | 0.413 | 3.064 | 4.208 | 0.010 | 0.007 | 4822.0 | 1855.0 | 1.0 |

| b_trend | 0.032 | 0.020 | -0.006 | 0.069 | 0.000 | 0.000 | 19898.0 | 11206.0 | 1.0 |

| delta | 0.385 | 0.144 | 0.105 | 0.644 | 0.003 | 0.002 | 2913.0 | 4912.0 | 1.0 |

| eta | 0.331 | 0.092 | 0.156 | 0.504 | 0.001 | 0.001 | 6893.0 | 7681.0 | 1.0 |

| alpha | 22.927 | 4.533 | 14.785 | 31.386 | 0.039 | 0.028 | 13487.0 | 11620.0 | 1.0 |

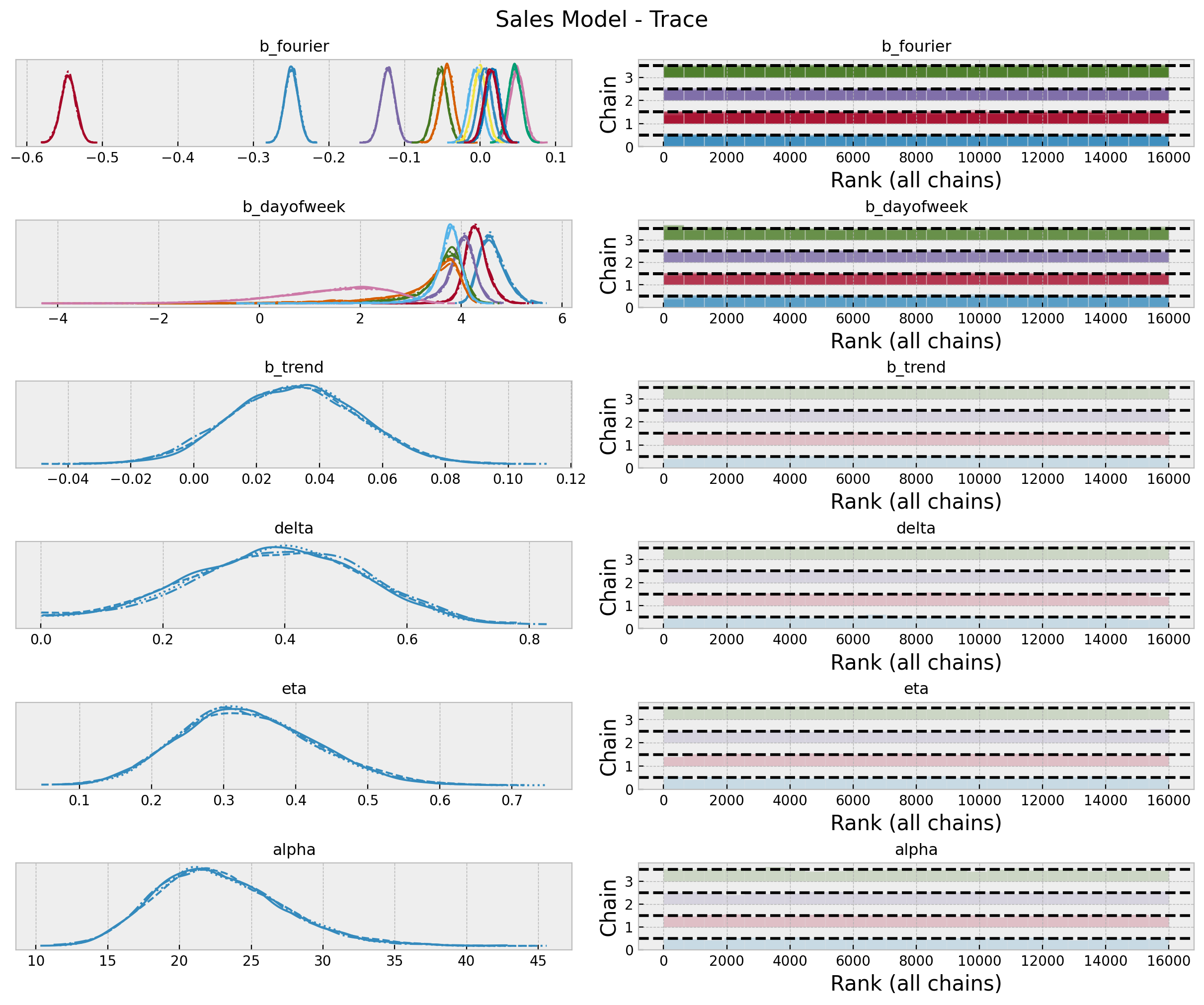

axes = az.plot_trace(

data=sales_idata,

var_names=var_names,

compact=True,

kind="rank_bars",

backend_kwargs={"figsize": (12, 10), "layout": "constrained"},

)

plt.gcf().suptitle("Sales Model - Trace", fontsize=16)

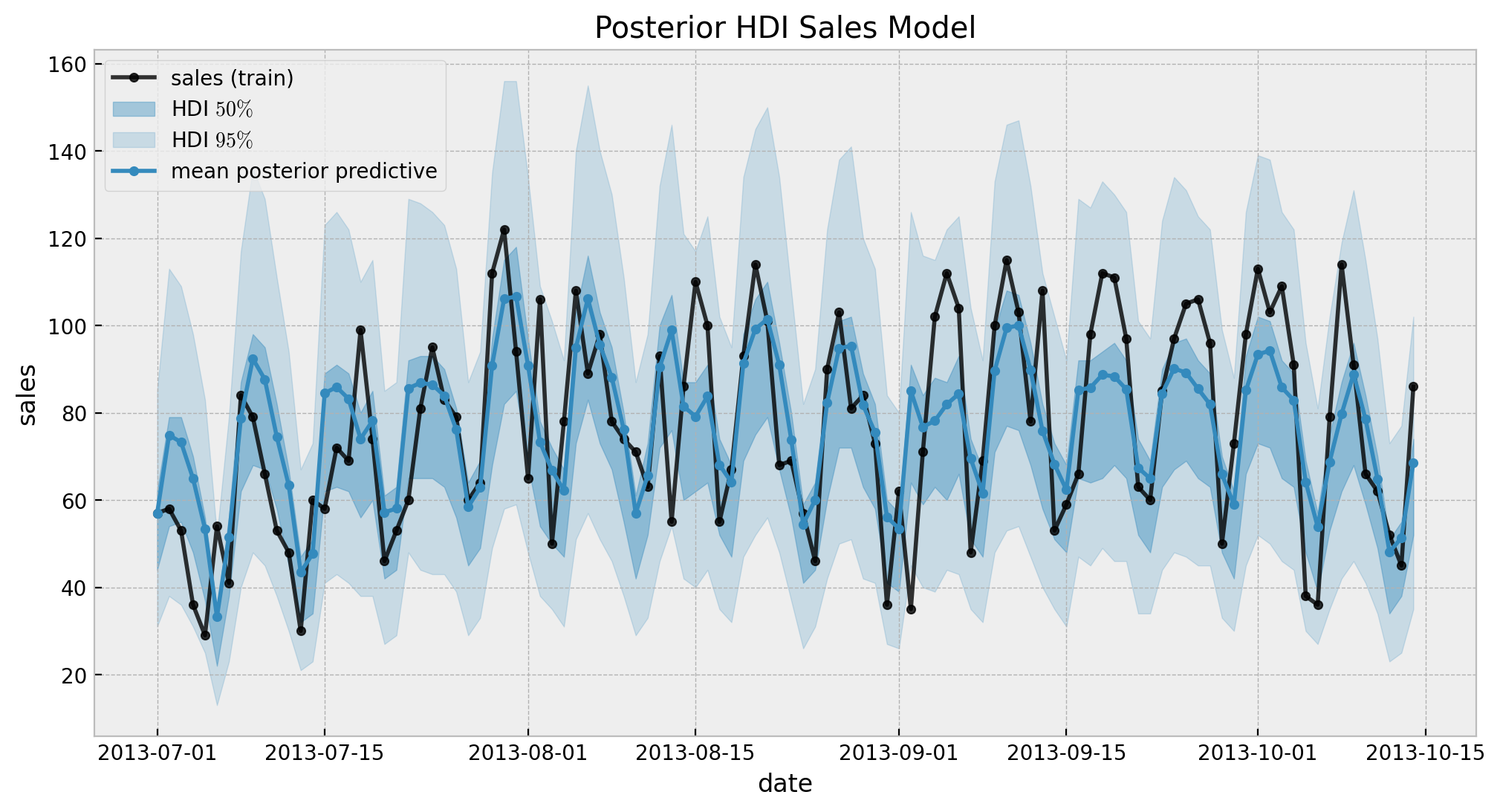

Now let’s look into the in-sample posterior predictive distribution.

fig, ax = plt.subplots()

sns.lineplot(

x="date",

y="sales",

data=df_train,

marker="o",

color="black",

alpha=0.8,

markersize=4,

markeredgecolor="black",

label="sales (train)",

)

az.plot_hdi(

x=date_train,

y=sales_posterior_predictive.posterior_predictive["likelihood"],

hdi_prob=0.5,

color="C0",

smooth=False,

fill_kwargs={"label": r"HDI $50\%$", "alpha": 0.4},

ax=ax,

)

az.plot_hdi(

x=date_train,

y=sales_posterior_predictive.posterior_predictive["likelihood"],

hdi_prob=0.95,

color="C0",

smooth=False,

fill_kwargs={"label": r"HDI $95\%$", "alpha": 0.2},

ax=ax,

)

sns.lineplot(

x=date_train,

y=sales_posterior_predictive.posterior_predictive["likelihood"].mean(

dim=("chain", "draw")

),

marker="o",

color="C0",

markersize=4,

markeredgecolor="C0",

label="mean posterior predictive",

ax=ax,

)

ax.legend(loc="upper left")

ax.set(title="Posterior HDI Sales Model")

The results look good. Still, we can not see the long term effect (yet!). Let’s now generate out-of-sample predictions.

Remark: Thank you Jonathan Dekermanjian for improving the code below to make the out-of-sample predictions code more efficient 🤜 🤛 (see this issue)!

# extract data needed to make predictions

date_test = df_test["date"]

sales_test = df_test["sales"]

trend_test = df_test["trend"]

dayofweek_idx_test, dayofweek_test = df_test["date"].dt.dayofweek.factorize()

periods_test = df_test["date"].dt.dayofyear / 365.25

fourier_features_test = pd.DataFrame(

{

f"{func}_order_{order}": getattr(np, func)(2 * np.pi * periods_test * order)

for order in range(1, n_order + 1)

for func in ("sin", "cos")

}

)

# posterior predictive (out-of-sample)

with sales_model:

pm.set_data(

new_data={

"sales": sales_test,

"trend": trend_test,

"dayofweek_data": dayofweek_idx_test,

"fourier_features_data": fourier_features_test.to_numpy().T,

"mu0": eps * np.zeros(sales_test.size),

},

coords={"date": date_test, "dayofweek": dayofweek_test},

)

sales_idata.extend(

other=pm.sample_posterior_predictive(

trace=sales_idata,

var_names=["likelihood"],

idata_kwargs={"coords": {"date": date_test, "dayofweek": dayofweek_test}},

),

join="right",

)

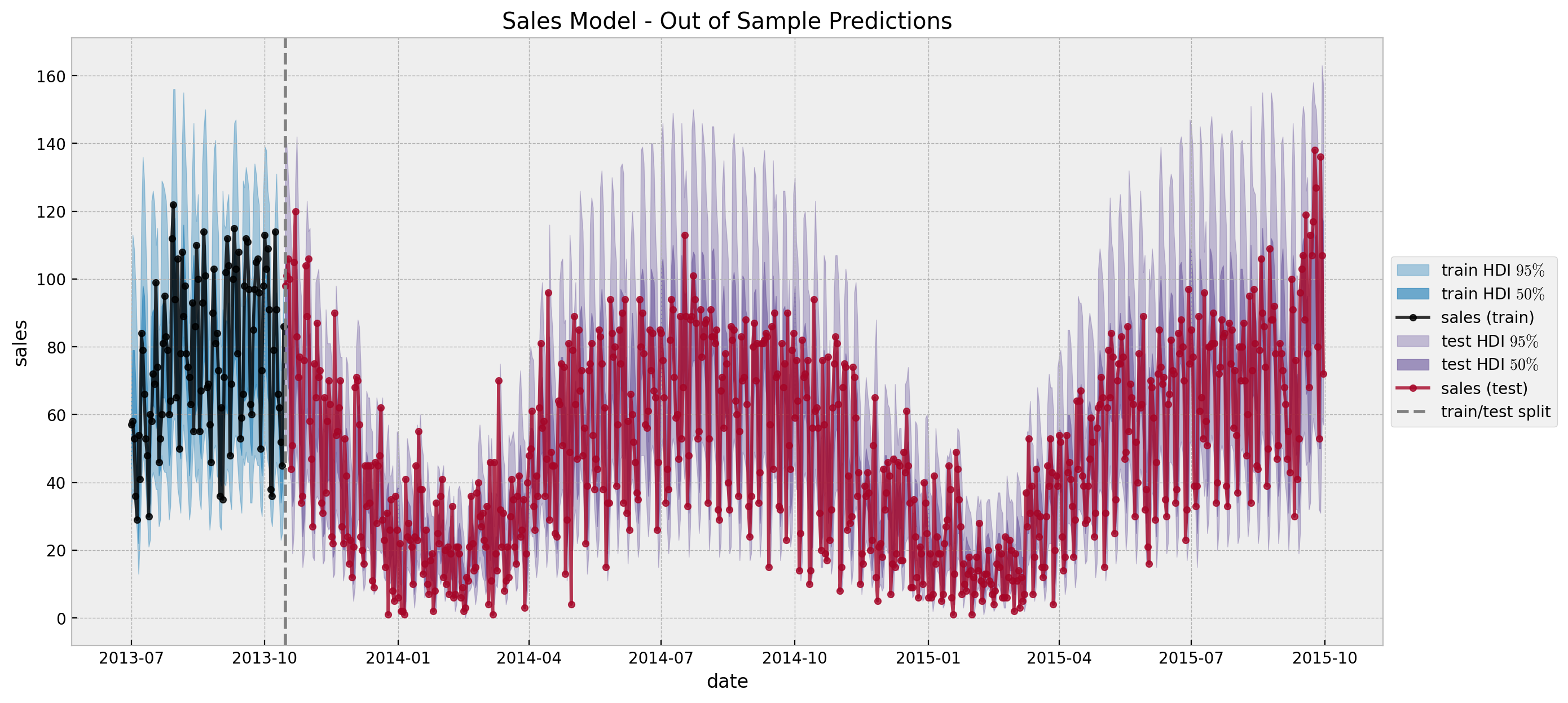

We are ready to see the final results:

fig, ax = plt.subplots(figsize=(15, 7))

az.plot_hdi(

x=date_train,

y=sales_posterior_predictive["posterior_predictive"]["likelihood"],

hdi_prob=0.95,

color="C0",

fill_kwargs={"alpha": 0.4, "label": r"train HDI $95\%$"},

smooth=False,

ax=ax,

)

az.plot_hdi(

x=date_train,

y=sales_posterior_predictive["posterior_predictive"]["likelihood"],

hdi_prob=0.5,

color="C0",

fill_kwargs={"alpha": 0.7, "label": r"train HDI $50\%$"},

smooth=False,

ax=ax,

)

sns.lineplot(

x="date",

y="sales",

data=df_train,

marker="o",

color="black",

alpha=0.8,

markersize=4,

markeredgecolor="black",

label="sales (train)",

ax=ax,

)

az.plot_hdi(

x=date_test,

y=sales_idata["posterior_predictive"]["likelihood"],

hdi_prob=0.95,

color="C2",

fill_kwargs={"alpha": 0.4, "label": r"test HDI $95\%$"},

smooth=False,

ax=ax,

)

az.plot_hdi(

x=date_test,

y=sales_idata["posterior_predictive"]["likelihood"],

hdi_prob=0.5,

color="C2",

fill_kwargs={"alpha": 0.7, "label": r"test HDI $50\%$"},

smooth=False,

ax=ax,

)

sns.lineplot(

x="date",

y="sales",

data=df_test,

marker="o",

color="C1",

alpha=0.8,

markersize=4,

markeredgecolor="C1",

label="sales (test)",

ax=ax,

)

ax.axvline(x=train_test_date, color="gray", linestyle="--", label="train/test split")

ax.legend(loc="center left", bbox_to_anchor=(1, 0.5))

ax.set(title="Sales Model - Out of Sample Predictions")

Yay! These predictions coincide with the original post Modeling Short Time Series with Prior Knowledge by Tim Radtke.

Appendix: Scan Operator

In this section I simply present some code which helped me with the recursive step of the model defined using the pytensor.scan operator. The documentation page scan - Looping in PyTensor is definitively a good starting point. It provides many examples and really tries to build some intuition. Nevertheless, to really understand what is going on I had to try it myself! One particular example that helped me for this special use case was the one on Multiple outputs, several taps values - Recurrent Neural Network with Scan. It provides exactly what I needed to implement the model! The idea is to explicitly define a function with the recursive step. In our case is simply:

def one_step(seasonality_t, sales_tm1, alpha_tm1, delta, eta):

return (1 - delta - eta) * seasonality_t + delta * alpha_tm1 + eta * sales_tm1

We can run this function a couple of times for testing purposes:

mu0 = pt.zeros(sales_data.shape.eval()[0])

mu0 = pt.set_subtensor(mu0[0], sales_data[0])

for t in range(1, 20):

step_output = one_step(seasonality[t], sales_data[t - 1], mu0[t - 1], delta, eta)

mu0 = pt.set_subtensor(mu0[t], step_output)

mu0.eval()

array([ 57. , 46.35528237, 77.36461315, 62.5669635 , 206.03102471, 60.91539446, 33.06142701, 38.64463848, 48.69596301, 84.01553658, 67.9182289 , 214.42825066, 67.67288707, 28.97307982, 42.4934078 , 43.52909534, 81.50515206, 68.25865339, 226.84680599, 77.11496603, 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ])

Now, to compare the result let’s run the scan code:

outputs, _ = pytensor.scan(

fn=one_step,

sequences=[

dict(input=seasonality[1:], taps=[-0]),

dict(input=sales_data, taps=[-1]),

],

outputs_info=dict(initial=mu0, taps=[-1]),

non_sequences=[delta, eta],

strict=True,

)

outputs.eval()array([[46.35528237, 43.69484783, 51.44501065, ..., 32.10927093, 32.10927093, 32.10927093], [77.36461315, 76.69969067, 78.63668906, ..., 73.80410715, 73.80410715, 73.80410715], [62.5669635 , 62.4007794 , 62.88489346, ..., 61.67708614, 61.67708614, 61.67708614], ..., [59.69723366, 59.69723366, 59.69723366, ..., 59.69723366, 59.69723366, 59.69723366], [31.08826576, 31.08826576, 31.08826576, ..., 31.08826576, 31.08826576, 31.08826576], [33.81745512, 33.81745512, 33.81745512, ..., 33.81745512, 33.81745512, 33.81745512]])

Not that the naïve choice output[-1] is not the correct choice (of course I made this mistake 🙈). The correct choice is to use the output[:, 0] to get the last element of the output.

outputs[:, 0].eval()array([ 46.35528237, 77.36461315, 62.5669635 , 206.03102471, 60.91539446, 33.06142701, 38.64463848, 48.69596301, 84.01553658, 67.9182289 , 214.42825066, 67.67288707, 28.97307982, 42.4934078 , 43.52909534, 81.50515206, 68.25865339, 226.84680599, 77.11496603, 35.2410469 , 42.38604802, 43.98564908, 83.71434839, 75.03218001, 223.71559658, 77.53323883, 38.71352354, 45.72966074, 57.26447802, 96.33602292, 77.50433362, 217.07865088, 82.40460194, 37.41142948, 48.41411987, 56.58039678, 87.00310458, 75.45419002, 215.44805382, 74.15228365, 40.34252016, 44.97789006, 51.59072859, 76.10426498, 68.99419101, 216.71068033, 80.73103627, 37.96599247, 44.80732999, 51.05013976, 88.93101823, 75.04113315, 203.22957544, 69.7566518 , 35.59106705, 38.5982584 , 48.3188339 , 84.33208137, 68.31739529, 201.33341532, 70.20431736, 30.49282447, 40.79887753, 35.11528204, 72.23567431, 69.8326729 , 205.21856554, 78.67772541, 35.45084071, 43.40436573, 51.28585601, 86.16062643, 73.08332093, 194.97761923, 77.05595542, 36.19211187, 40.85234724, 42.09669308, 78.93666395, 72.99853526, 200.12680735, 75.62789428, 38.19101915, 41.24660307, 46.48963647, 78.82210601, 70.67371747, 194.62390142, 73.95875205, 34.50534998, 43.01153049, 49.63817769, 82.14963965, 70.18719913, 190.02505947, 71.5217414 , 30.8291644 , 32.42343611, 41.73709566, 78.56230307, 65.24091702, 171.32735382, 59.69723366, 31.08826576, 33.81745512])

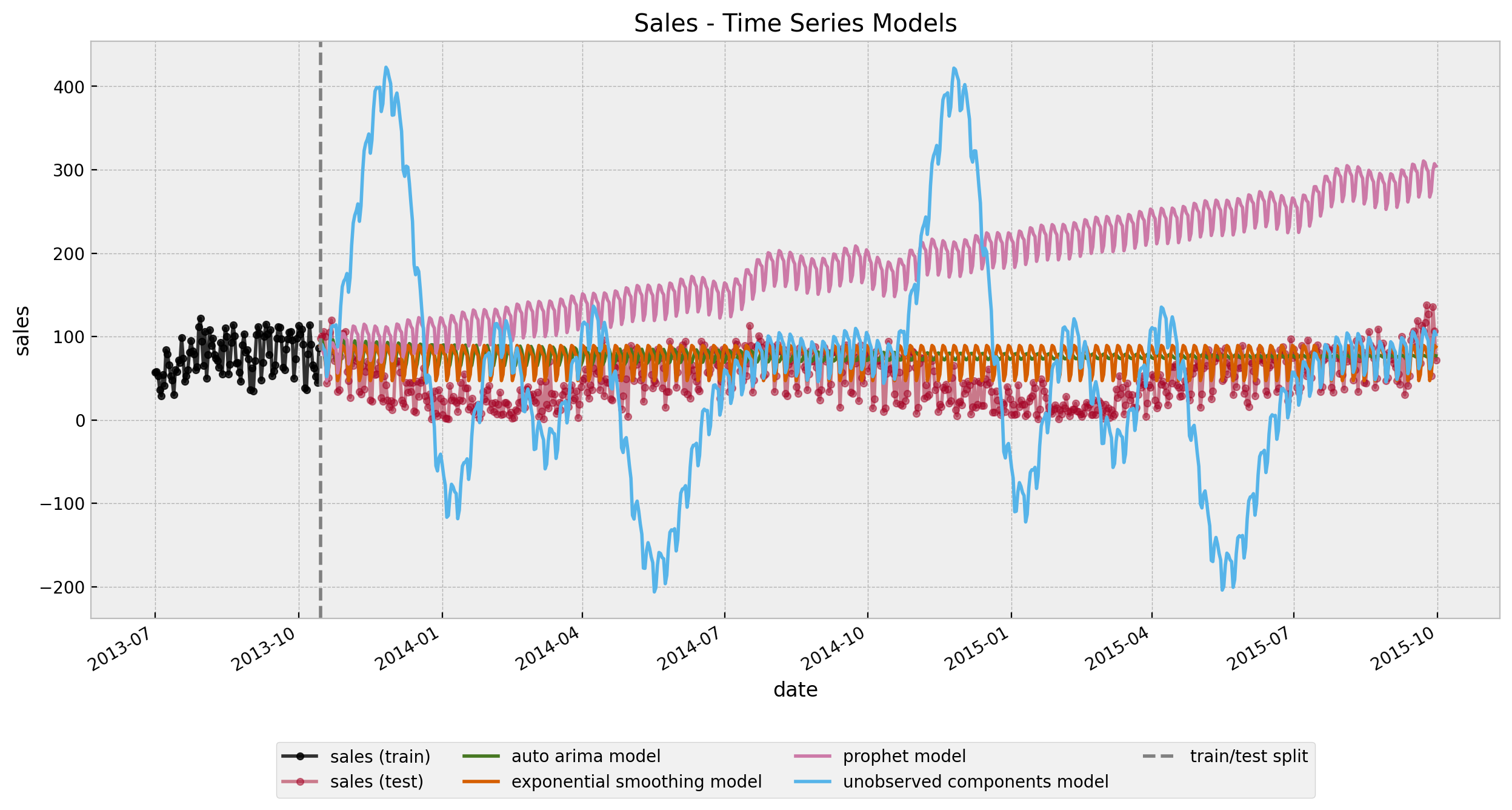

Appendix B: Simple Time Series Forecasting Models

Just for the sake of completeness we try to fit some time series forecasting models using sktime. Note how easy and convenient the API is :) !

from sktime.forecasting.arima import AutoARIMA

from sktime.forecasting.base import ForecastingHorizon

from sktime.forecasting.exp_smoothing import ExponentialSmoothing

from sktime.forecasting.fbprophet import Prophet

from sktime.forecasting.structural import UnobservedComponents

y_train = df_train.set_index("date")["sales"]

y_train.index.freq = "D"

y_test = df_test.set_index("date")["sales"]

y_test.index.freq = "D"

fh = ForecastingHorizon(y_test.index, is_relative=False)

# arima model

arima_forecaster = AutoARIMA()

arima_forecaster.fit(y=y_train)

y_pred_arima = arima_forecaster.predict(fh=fh)

# exponential smoothing model

es_forecaster = ExponentialSmoothing(seasonal="additive", sp=7)

es_forecaster.fit(y=y_train)

y_pred_es = es_forecaster.predict(fh=fh)

# prophet model

p_forecaster = Prophet(weekly_seasonality=True, yearly_seasonality=True)

p_forecaster.fit(y=y_train)

y_pred_p = p_forecaster.predict(fh=fh)

# state space model (unobserved components)

uc_forecaster = UnobservedComponents(

level="local level",

freq_seasonal=[{"period": 7, "harmonics": 6}, {"period": 365.25, "harmonics": 6}],

)

uc_forecaster.fit(y=y_train)

y_pred_uc = uc_forecaster.predict(fh=fh)Let’s visualize the predictions.

fig, ax = plt.subplots(figsize=(15, 7))

sns.lineplot(

x="date",

y="sales",

data=df_train,

marker="o",

color="black",

alpha=0.8,

markersize=4,

markeredgecolor="black",

label="sales (train)",

ax=ax,

)

sns.lineplot(

x="date",

y="sales",

data=df_test,

marker="o",

color="C1",

alpha=0.5,

markersize=4,

markeredgecolor="C1",

label="sales (test)",

ax=ax,

)

y_pred_arima.plot(label="auto arima model", color="C3", ax=ax)

y_pred_es.plot(label="exponential smoothing model", color="C4", ax=ax)

y_pred_p.plot(label="prophet model", color="C5", ax=ax)

y_pred_uc.plot(label="unobserved components model", color="C6", ax=ax)

ax.axvline(x=train_test_date, color="gray", linestyle="--", label="train/test split")

ax.legend(loc="upper center", bbox_to_anchor=(0.5, -0.2), ncol=4)

ax.set(title="Sales - Time Series Models")

None of them capture both the short and long term seasonality (of course! there is simply not enough data!).