Updated Version: 2019/09/21 (Extension + Minor Corrections)

After a sequence of preliminary posts (Sampling from a Multivariate Normal Distribution and Regularized Bayesian Regression as a Gaussian Process), I want to explore a concrete example of a gaussian process regression. We continue following Gaussian Processes for Machine Learning, Ch 2.

Other recommended references are:

- Gaussian Processes for Timeseries Modeling by S. Roberts, M. Osborne, M. Ebden, S. Reece, N. Gibson & S. Aigrain.

- Bayesian Data Analyis, Chapter 21 by by Andrew Gelman, John Carlin, Hal Stern, David Dunson, Aki Vehtari, and Donald Rubin.

Prepare Notebook

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style(

style='darkgrid',

rc={'axes.facecolor': '.9', 'grid.color': '.8'}

)

sns.set_palette(palette='deep')

%matplotlib inline

plt.rcParams['figure.figsize'] = [12, 6]

plt.rcParams['figure.dpi'] = 100Generate Data Samples

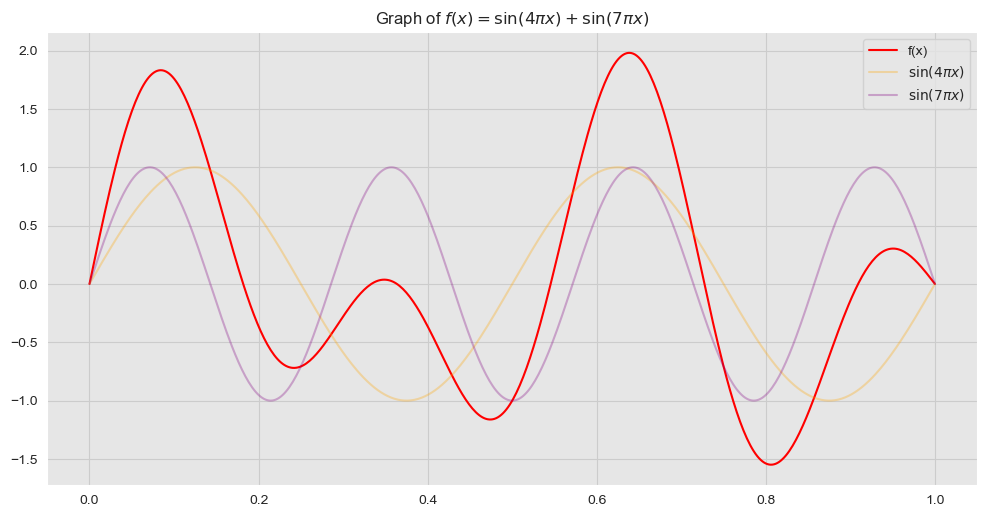

We consider de model \(y = f(x) + \varepsilon\), where \(\varepsilon \sim N(0, \sigma_n)\). Here \(f\) does not need to be a linear function of \(x\). As a concrete example, let us consider (1-dim problem)

\[ f(x) = \sin(4\pi x) + \sin(7\pi x) \]

# Define dimension.

d = 1

# Number of samples (training set).

n = 500

x = np.linspace(start=0, stop=1, num=n)

def f(x):

f = np.sin((4*np.pi)*x) + np.sin((7*np.pi)*x)

return(f)

f_x = f(x)

fig, ax = plt.subplots()

# Plot function f.

sns.lineplot(x=x, y=f_x, color='red', label = 'f(x)', ax=ax)

# Plot function components.

sns.lineplot(x=x, y=np.sin((4*np.pi)*x), color='orange', label='$\sin(4 \pi x)$', alpha=0.3, ax=ax)

sns.lineplot(x=x, y=np.sin((7*np.pi)*x), color='purple', label='$\sin(7 \pi x)$', alpha=0.3, ax=ax)

ax.legend(loc='upper right')

ax.set_title(r'Graph of $f(x) = \sin(4\pi x) + \sin(7\pi x)$');

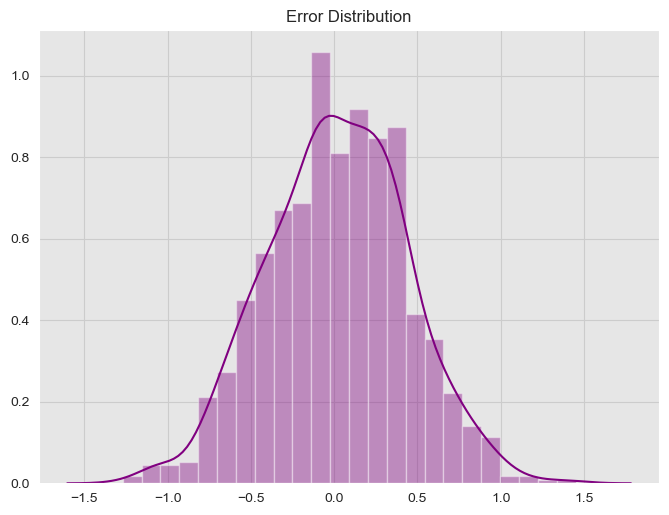

Next, we generate some training sample observations:

# Error standard deviation.

sigma_n = 0.4

# Errors.

epsilon = np.random.normal(loc=0, scale=sigma_n, size=n)

# Observed target variable.

y = f_x + epsilonLet us see the error distribution.

fig, ax = plt.subplots()

# Plot errors.

sns.distplot(epsilon, ax=ax)

ax.set(title='Error Distribution');

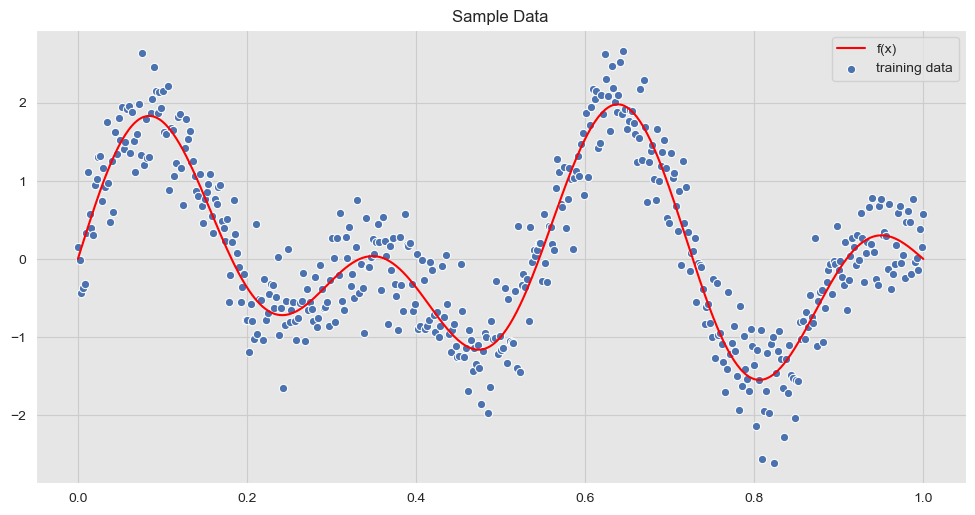

We now visualize the sample data:

fig, ax = plt.subplots()

# Plot training data.

sns.scatterplot(x=x, y=y, label='training data', ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax);

ax.set(title='Sample Data')

ax.legend(loc='upper right');

We now consider test data points on which we want to generate predictions.

n_star = 100

x_star = np.linspace(start=0, stop=1, num=n_star)Kernel Function

Recall that a gaussian process is completely specified by its mean function and covariance (we usually take the mean equal to zero, although it is not necessary). A common choice is the squared exponential,

\[ \text{cov}(f(x_p), f(x_q)) = k_{\sigma_f, \ell}(x_p, x_q) = \sigma_f \exp\left(-\frac{1}{2\ell^2} ||x_p - x_q||^2\right) \]

where \(\sigma_f , \ell >0\) are hyperparameters. Observe that the covariance between two samples are modeled as a function of the inputs.

Remark: “It can be shown that the squared exponential covariance function corresponds to a Bayesian linear regression model with an infinite basis functions number of basis function.” (Gaussian Processes for Machine Learning, Ch 2.2)

def kernel_function(x, y, sigma_f=1, l=1):

"""Define squared exponential kernel function."""

kernel = sigma_f * np.exp(- (np.linalg.norm(x - y)**2) / (2 * l**2))

return kernelLet us select the parameters:

l = 0.1

sigma_f = 2Compute Covariance Matrices

A key observation, as illustrated in Regularized Bayesian Regression as a Gaussian Process, is that the specification of the covariance function implies a distribution over functions.

Let us denote by \(K(X, X) \in M_{n}(\mathbb{R})\), \(K(X_*, X) \in M_{n_* \times n}(\mathbb{R})\) and \(K(X_*, X_*) \in M_{n_*}(\mathbb{R})\) the covariance matrices applies to \(x\) and \(x_*\).

import itertools

def compute_cov_matrices(x, x_star, sigma_f=1, l=1):

"""

Compute components of the covariance matrix of the joint distribution.

We follow the notation:

- K = K(X, X)

- K_star = K(X_*, X)

- K_star2 = K(X_*, X_*)

"""

n = x.shape[0]

n_star = x_star.shape[0]

K = [kernel_function(i, j, sigma_f=sigma_f, l=l) for (i, j) in itertools.product(x, x)]

K = np.array(K).reshape(n, n)

K_star2 = [kernel_function(i, j, sigma_f=sigma_f, l=l) for (i, j) in itertools.product(x_star, x_star)]

K_star2 = np.array(K_star2).reshape(n_star, n_star)

K_star = [kernel_function(i, j, sigma_f=sigma_f, l=l) for (i, j) in itertools.product(x_star, x)]

K_star = np.array(K_star).reshape(n_star, n)

return (K, K_star2, K_star)We compute the covariance matrices using the function above:

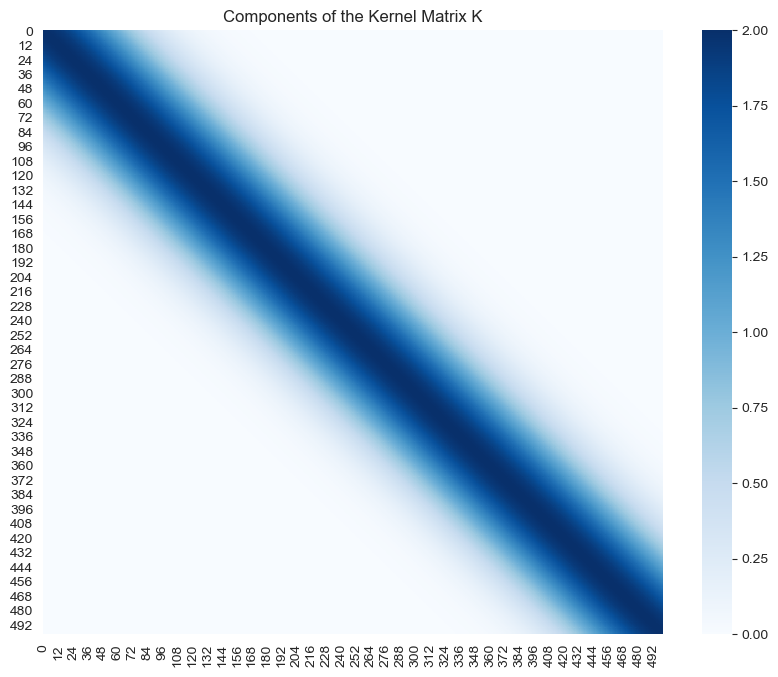

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f=sigma_f, l=l)We now visualize each component:

- \(K(X, X)\)

K.shape(500, 500)fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=K, cmap='Blues', ax=ax)

ax.set(title='Components of the Kernel Matrix K');

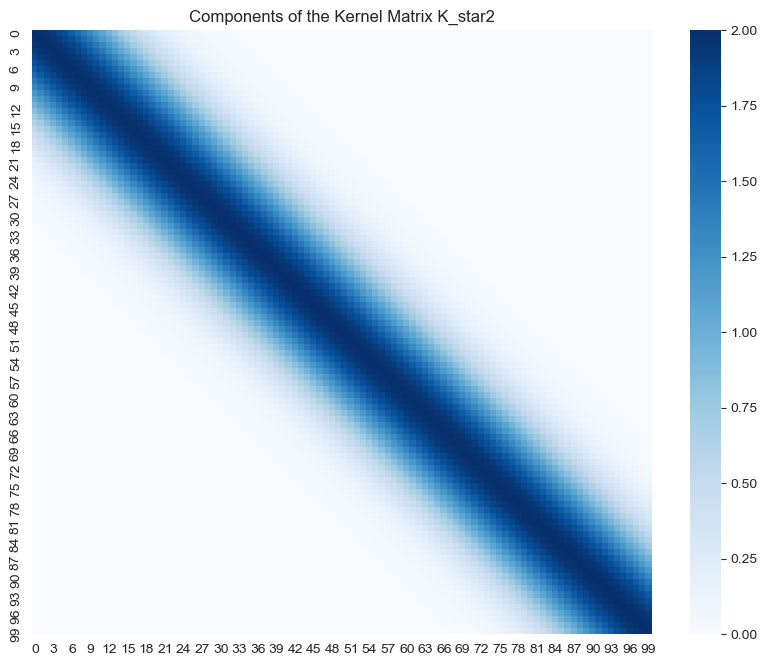

- \(K(X_*, X_*)\)

K_star2.shape(100, 100)fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=K_star2, cmap='Blues', ax=ax)

ax.set(title='Components of the Kernel Matrix K_star2');

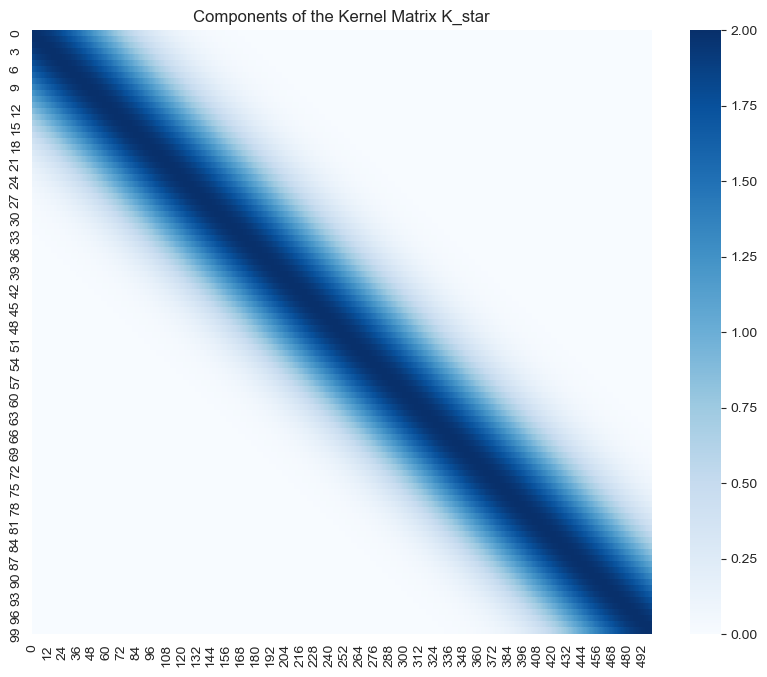

- \(K(X_*, X)\)

K_star.shape(100, 500)fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=K_star, cmap='Blues', ax=ax)

ax.set(title='Components of the Kernel Matrix K_star');

Note how the highest values of the support of all these matrices is localized around the diagonal.

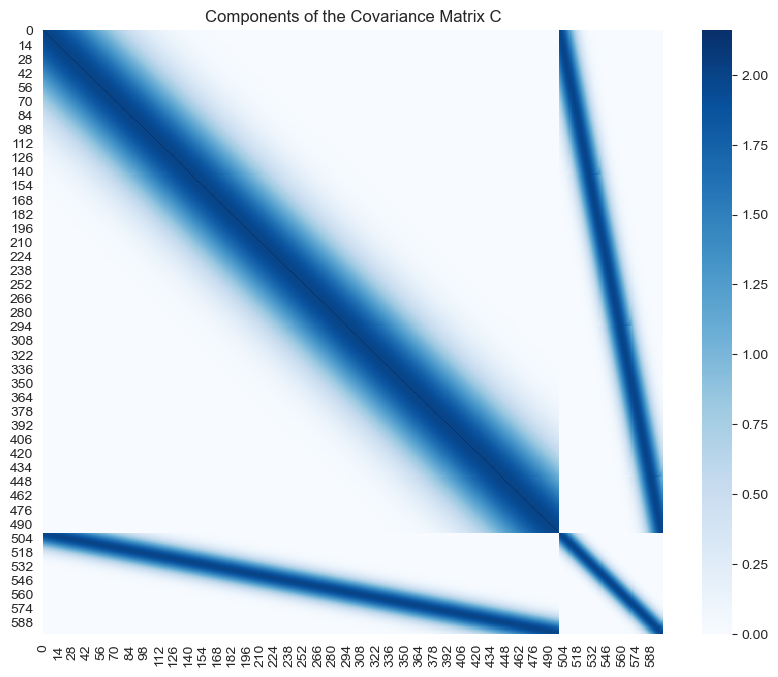

Joint Distribution

The joint distribution of \(y\) and \(f_*\) is given by

\[ \left( \begin{array}{c} y \\ f_* \end{array} \right) \sim N(0, C) \]

where

\[ C = \left( \begin{array}{cc} K(X, X) + \sigma^2_n I & K(X, X_*) \\ K(X_*, X) & K(X_*, X_*) \end{array} \right) \]

Observe that we need to add the term \(\sigma^2_n I\) to the upper left component to account for noise (assuming additive independent identically distributed Gaussian noise). We now compute the matrix \(C\).

a = np.concatenate((K + (sigma_n**2)*np.eye(n), K_star), axis=0)

a.shape(600, 500)b = np.concatenate((K_star.T, K_star2), axis=0)

b.shape(600, 100)C = np.concatenate((a, b), axis=1)

C.shape(600, 600)# Indeed,

C.shape == (n + n_star, n + n_star)TrueLet us verify that \(C\) is symmetric.

np.all(C.T == C)TrueWe can also see this visually:

fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=C, cmap='Blues', ax=ax)

ax.set(title='Components of the Covariance Matrix C');

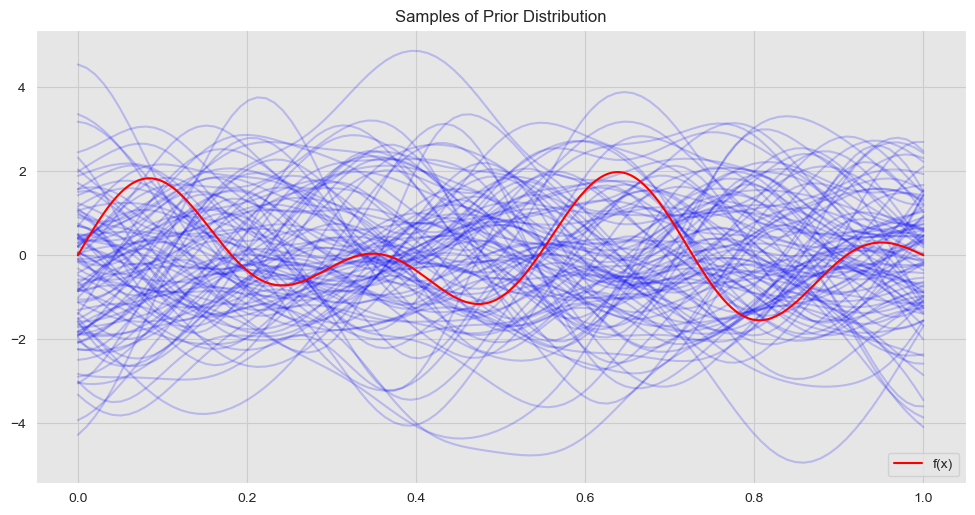

Prior Distribution

From the consistency requirement of gaussian processes we know that the prior distribution for \(f_*\) is \(N(0, K(X_*, X_*))\). Let us visualize some sample functions from this prior:

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from prior distribution.

z_star = np.random.multivariate_normal(mean=np.zeros(n_star), cov=K_star2)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color='blue', alpha=0.2, ax=ax)

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax)

ax.set(title='Samples of Prior Distribution')

ax.legend(loc='lower right');

Conditional Distribution

As described in our main reference, to get the posterior distribution over functions we need to restrict this joint prior distribution to contain only those functions which agree with the observed data points, that is, we are interested in computing \(f_*|X, y, X_*\). Using the results of Gaussian Processes for Machine Learning, Appendinx A.2, one can show that

\[ f_*|X, y, X_* \sim N(\bar{f}_*, \text{cov}(f_*)) \]

where

\[ \bar{f}_* = K(X_*, X)(K(X, X) + \sigma^2_n I)^{-1} y \in \mathbb{R}^{n_*} \]

and

\[ \text{cov}(f_*) = K(X_*, X_*) - K(X_*, X)(K(X, X) + \sigma^2_n I)^{-1} K(X, X_*) \in M_{n_*}(\mathbb{R}) \]

We now calculate the parameters of the posterior distribution:

def compute_gpr_parameters(K, K_star2, K_star, sigma_n):

"""Compute gaussian regression parameters."""

n = K.shape[0]

# Mean.

f_bar_star = np.dot(K_star, np.dot(np.linalg.inv(K + (sigma_n**2)*np.eye(n)), y.reshape([n, d])))

# Covariance.

cov_f_star = K_star2 - np.dot(K_star, np.dot(np.linalg.inv(K + (sigma_n**2)*np.eye(n)), K_star.T))

return (f_bar_star, cov_f_star)# Compute posterior mean and covariance.

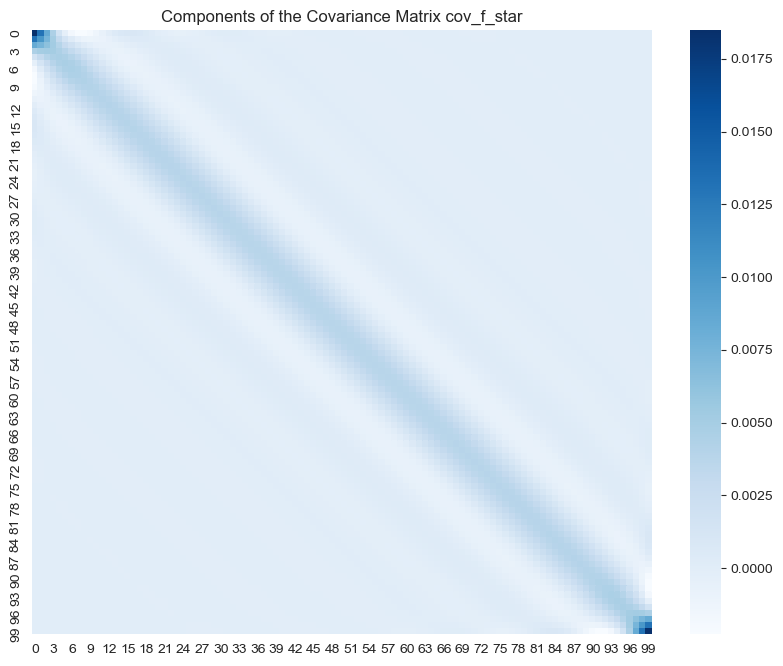

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)Let us visualize the covariance components.

fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=cov_f_star, cmap='Blues', ax=ax)

ax.set_title('Components of the Covariance Matrix cov_f_star');

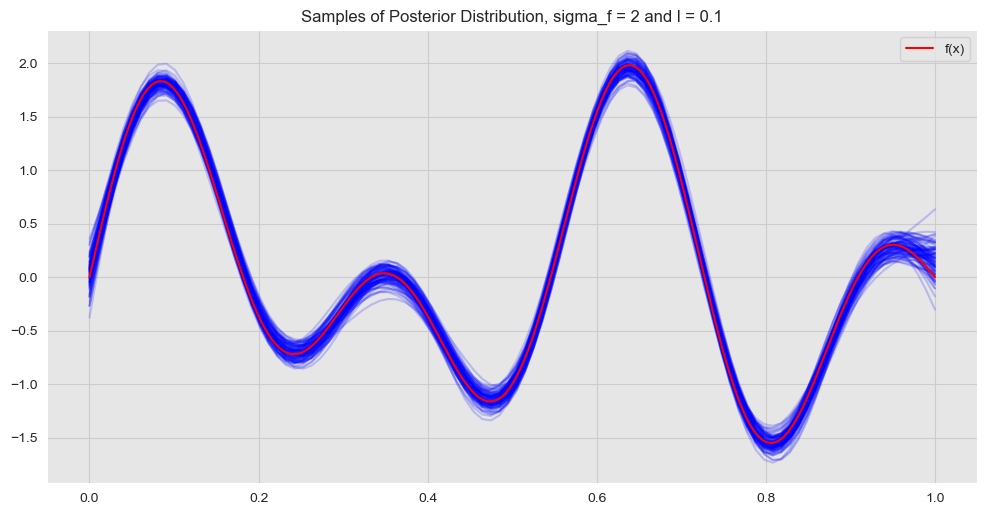

Let us now sample from the posterior distribution:

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from posterior distribution.

z_star = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color="blue", alpha=0.2, ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label = 'f(x)', ax=ax)

ax.set(title=f'Samples of Posterior Distribution, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

Hyperparameters

We now study the effect of the hyperparameters \(\sigma_f\) and \(\ell\) of the kernel function defined above.

The hyperparameter \(\sigma_f\) describes the amplitude of the function.

The hyperparameter \(\ell\) is a locality parameter, i.e. how far the points interact.

Let us see some concrete examples:

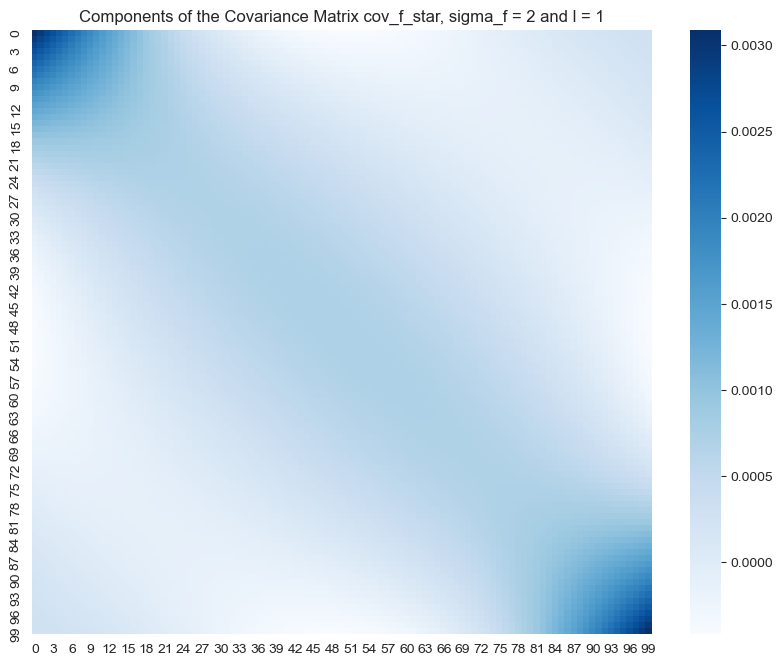

- \(\sigma_f = 2\) and \(\ell = 1\)

l = 1

sigma_f = 2

# Compute covariance matrices.

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f=sigma_f, l=l)

# Compute gaussian process parameters.

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)

# Plot posterior covariance matrix components.

fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=cov_f_star, cmap='Blues', ax=ax)

ax.set(title=f'Components of the Covariance Matrix cov_f_star, sigma_f = {sigma_f} and l = {l}');

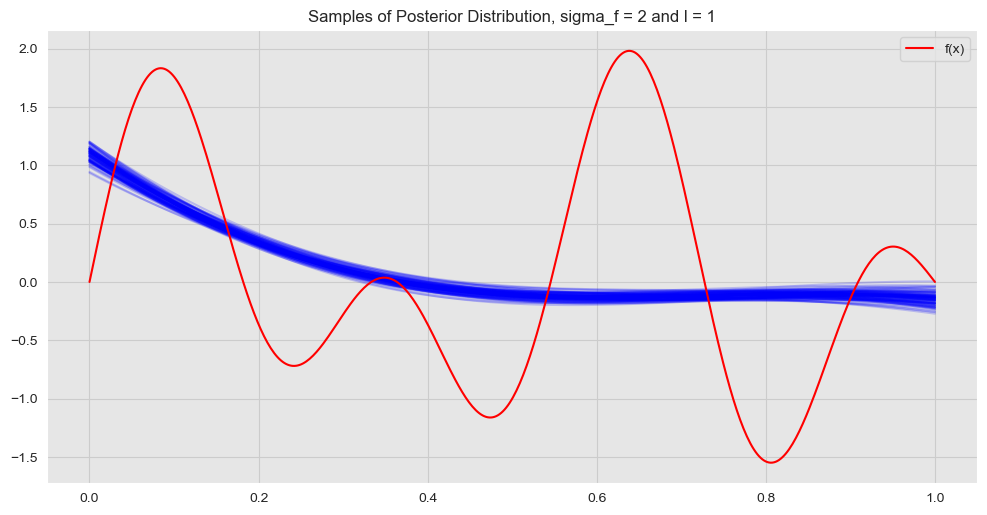

In this case the values of the posterior covariance matrix are not that localized. This means that we expect points far away can still have some interaction, i.e. the fit becomes more global. Let us plot the resulting fit:

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f=sigma_f, l=l)

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from posterior distribution.

z_star = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color='blue', alpha=0.2, ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax)

ax.set(title=f'Samples of Posterior Distribution, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

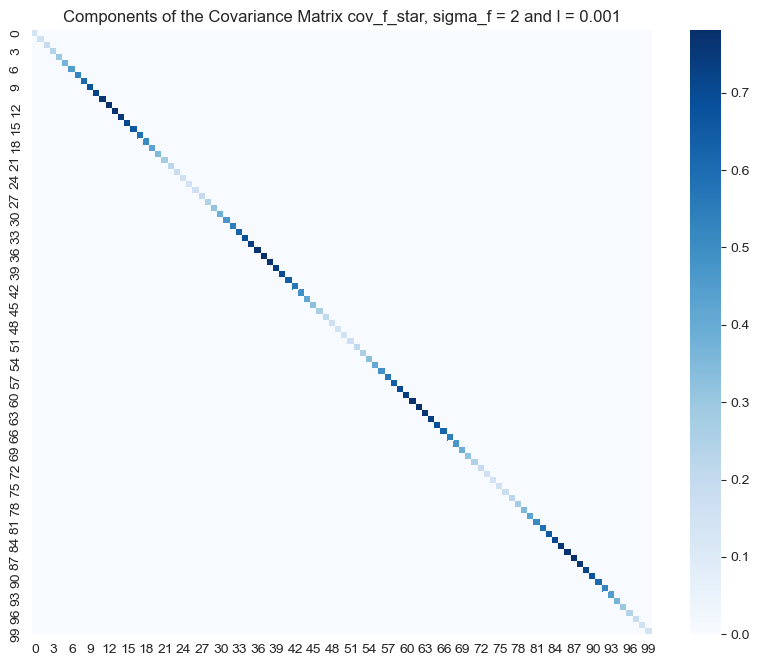

- \(\sigma_f = 2\) and \(\ell = 0.001\)

l = 0.001

sigma_f = 2

# Compute covariance matrices.

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f=sigma_f, l=l)

# Compute gaussian process parameters.

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)

# Plot posterior covariance matrix components.

fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=cov_f_star, cmap='Blues', ax=ax)

ax.set(title=f'Components of the Covariance Matrix cov_f_star, sigma_f = {sigma_f} and l = {l}');

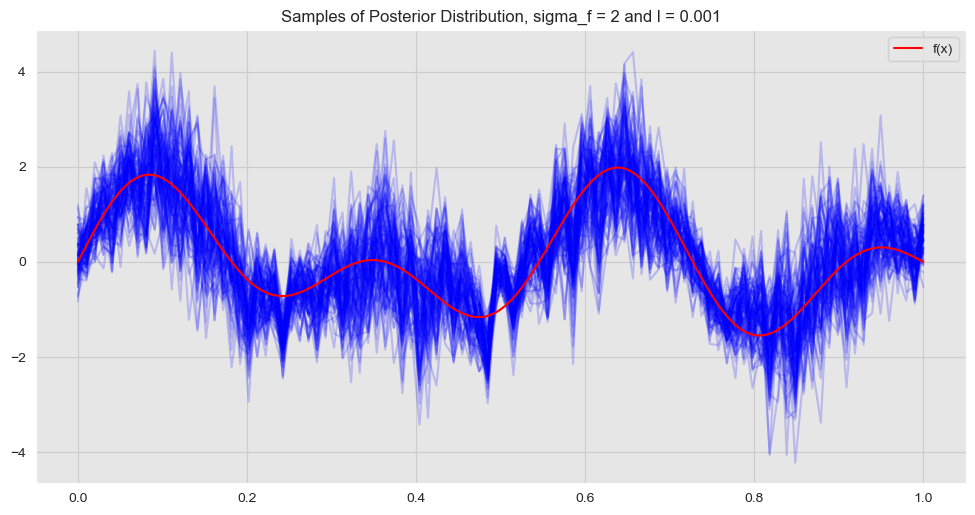

In contrast, we see that for these set of hyper parameters the higher values of the posterior covariance matrix are concentrated along the diagonal. This means that we expect points far away to have no effect on each other, i.e. the fit becomes more local. Let us plot the resulting fit:

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from posterior distribution.

z_star = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color='blue', alpha=0.2, ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax)

ax.set(title=f'Samples of Posterior Distribution, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

Hence, we see that the hyperparameter \(\ell\) somehow encodes the “complexity” and “locality” of the model.

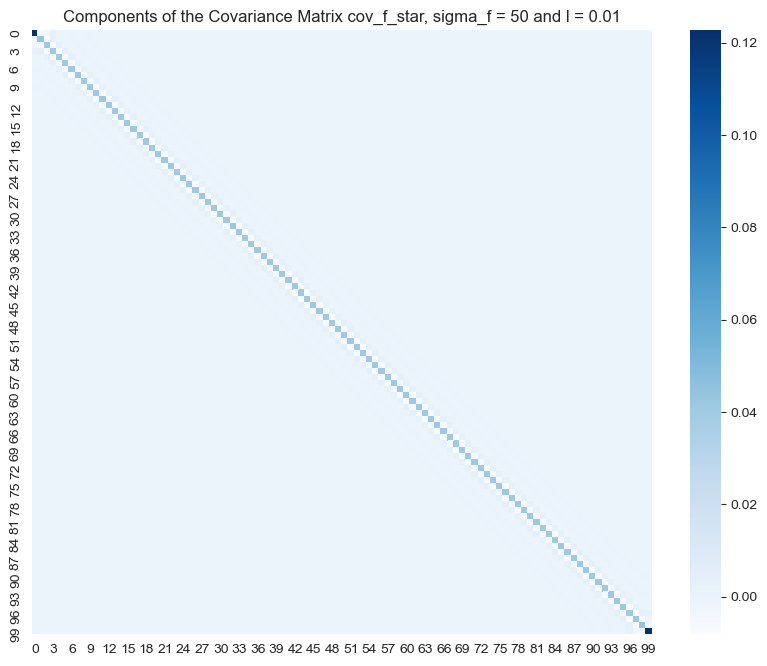

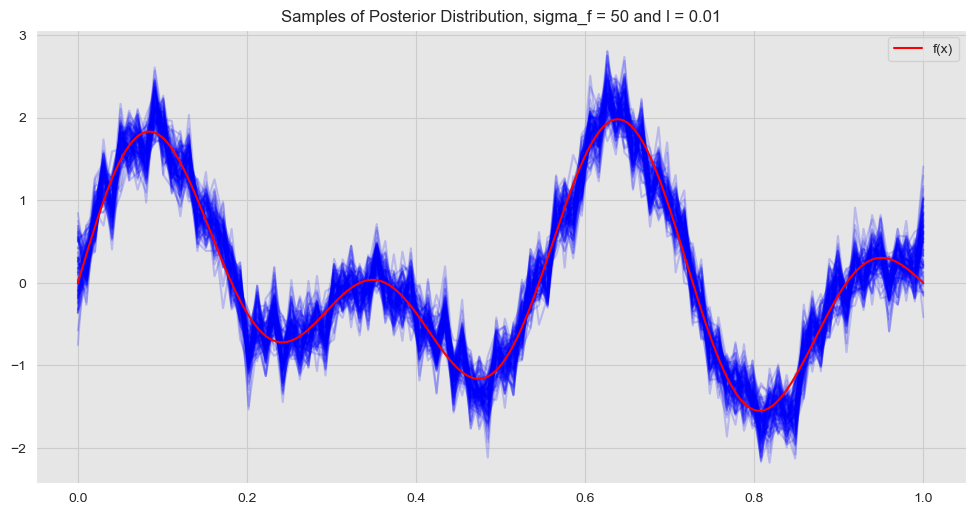

- \(\sigma_f = 25\) and \(\ell = 0.01\)

l = 0.01

sigma_f = 50

# Compute covariance matrices.

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f=sigma_f, l=l)

# Compute gaussian process parameters.

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)

# Plot posterior covariance matrix components.

fig, ax = plt.subplots(figsize=(10, 8))

sns.heatmap(data=cov_f_star, cmap='Blues', ax=ax)

ax.set(title=f'Components of the Covariance Matrix cov_f_star, sigma_f = {sigma_f} and l = {l}');

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from posterior distribution.

z_star = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color='blue', alpha=0.2, ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label = 'f(x)', ax=ax);

ax.set(title=f'Samples of Posterior Distribution, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

The hyperparameter \(\sigma_f\) enoces the amplitude of the fit.

GaussianProcessRegressor from Scikit-Learn

Note that in the examples above he had to compute the inverse of \(K(X,X) + \sigma_n^2 I\), which can be computationally expensive for larger data sets. A better approach is to use the Cholesky decomposition of \(K(X,X) + \sigma_n^2 I\) as described in Gaussian Processes for Machine Learning, Ch 2 Algorithm 2.1. This is actually the implementation used by Scikit-Learn. We now describe how to fit a GaussianProcessRegressor model using Scikit-Learn and compare it with the results obtained above. Please refer to the docomentation example to get more detailed information.

Kernel Object

There are my kernel functions implemented in Scikit-Learn. Let us see how define the squared exponential:

from sklearn.gaussian_process import GaussianProcessRegressor

from sklearn.gaussian_process.kernels import ConstantKernel, RBF

# Define kernel parameters.

l = 0.1

sigma_f = 2

# Define kernel object.

kernel = ConstantKernel(constant_value=sigma_f,constant_value_bounds=(1e-3, 1e3)) \

* RBF(length_scale=l, length_scale_bounds=(1e-3, 1e3))The tuples on each kernel component represent the lower and upper bound of the hyperparameters. The gaussian process fit automatically selects the best hyperparameters which maximize the log-marginal likelihood. The marginal likelihood is the integral of the likelihood times the prior.

GaussianProcessRegressor

Now we define de GaussianProcessRegressor object.

from sklearn.gaussian_process import GaussianProcessRegressor

# Define GaussianProcessRegressor object.

gp = GaussianProcessRegressor(kernel=kernel, alpha=sigma_n**2, n_restarts_optimizer=10)We reshape the variables into matrix form.

X = x.reshape(n, d)

X_star = x_star.reshape(n_star, d)# Fit to data using Maximum Likelihood Estimation of the parameters.

gp.fit(X, y)GaussianProcessRegressor(alpha=0.16000000000000003, copy_X_train=True,

kernel=1.41**2 * RBF(length_scale=0.1),

n_restarts_optimizer=10, normalize_y=False,

optimizer='fmin_l_bfgs_b', random_state=None)Predictions

# Make the prediction on test set.

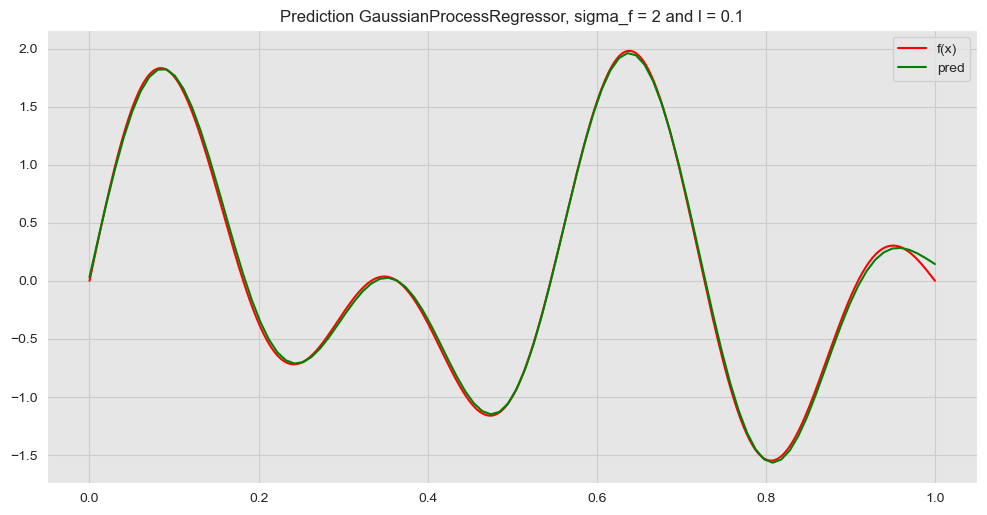

y_pred = gp.predict(X_star)Let us plot the prediction.

fig, ax = plt.subplots()

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax)

# Plot prediction.

sns.lineplot(x=x_star, y=y_pred, color='green', label='pred', ax=ax)

ax.set(title=f'Prediction GaussianProcessRegressor, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

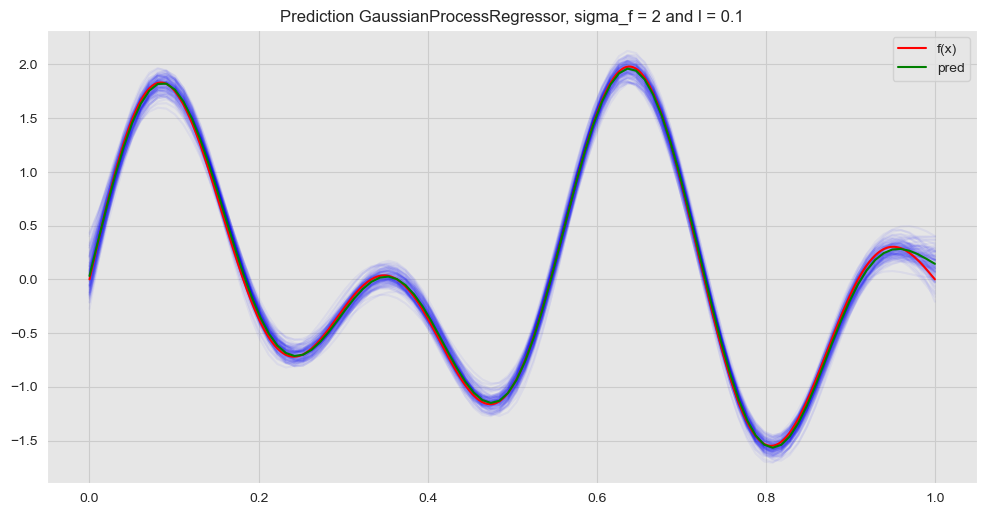

Next, we plot this prediction against many samples from the posterior distribution obtained above.

K, K_star2, K_star = compute_cov_matrices(x, x_star, sigma_f = sigma_f, l = l)

f_bar_star, cov_f_star = compute_gpr_parameters(K, K_star2, K_star, sigma_n)

fig, ax = plt.subplots()

for i in range(0, 100):

# Sample from posterior distribution.

z_star = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star)

# Plot function.

sns.lineplot(x=x_star, y=z_star, color='blue', alpha=0.05, ax=ax);

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax);

# Plot prediction.

sns.lineplot(x=x_star, y=y_pred, color='green', label='pred', ax=ax);

ax.set(title=f'Prediction GaussianProcessRegressor, sigma_f = {sigma_f} and l = {l}')

ax.legend(loc='upper right');

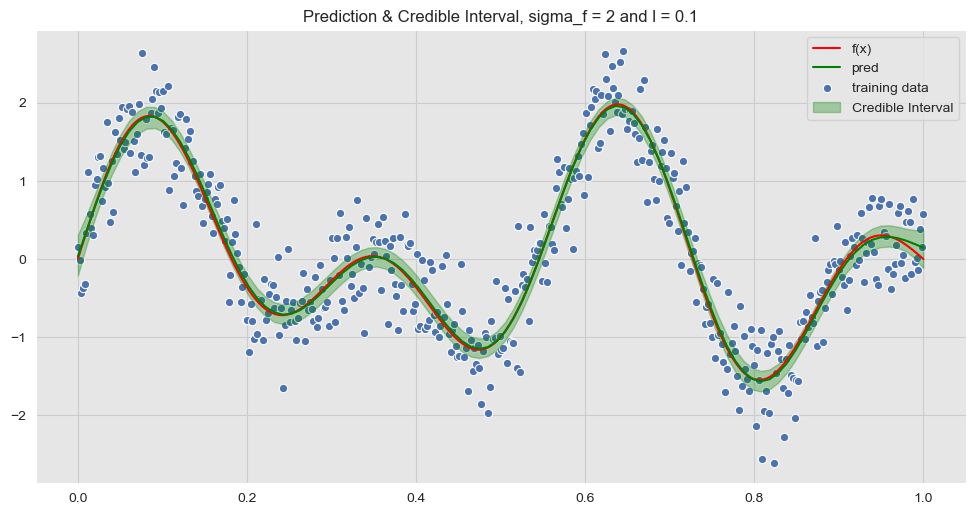

Credible Interval Interval

Let us compute the credible intervals:

# Generate samples from posterior distribution.

y_hat_samples = np.random.multivariate_normal(mean=f_bar_star.squeeze(), cov=cov_f_star, size=100)

# Compute the mean of the sample.

y_hat = np.apply_over_axes(func=np.mean, a=y_hat_samples, axes=0).squeeze()

# Compute the standard deviation of the sample.

y_hat_sd = np.apply_over_axes(func=np.std, a=y_hat_samples, axes=0).squeeze()We now plot the confidence interval corresponding to a corridor associated with two standard deviations.

fig, ax = plt.subplots()

# Plot training data.

sns.scatterplot(x=x, y=y, label='training data', ax=ax)

# Plot corridor.

ax.fill_between(

x=x_star,

y1=(y_hat - 2*y_hat_sd),

y2=(y_hat + 2*y_hat_sd),

color='green',

alpha = 0.3,

label='Credible Interval'

)

# Plot "true" linear fit.

sns.lineplot(x=x, y=f_x, color='red', label='f(x)', ax=ax);

# Plot prediction.

sns.lineplot(x=x_star, y=y_pred, color='green', label='pred', ax=ax)

ax.set_title('Prediction & Credible Interval, sigma_f = {} and l = {}'.format(sigma_f, l))

ax.legend(loc='upper right');

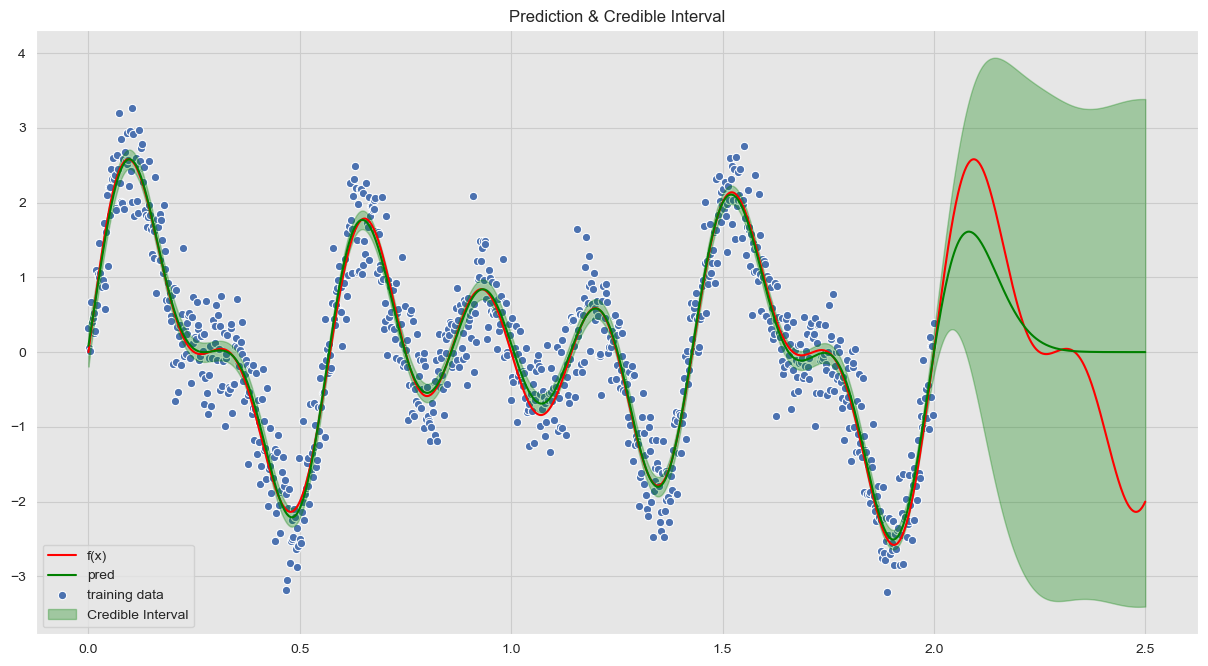

Summary & Final Example

In this post we have studied and experimented the fundamentals of gaussian process regression with the intention to gain some intuition about it. Let us finalize with a self-contain example where we only use the tools from Scikit-Learn.

Construct Training Set

# Set dimension.

d = 1

# Number of training points.

n = 1000

# Length of the training set.

L = 2

# Generate training features.

x = np.linspace(start=0, stop=L, num=n)

X = x.reshape(n, d)

# Error standar deviation.

sigma_n = 0.4

# Errors.

epsilon = np.random.normal(loc=0, scale=sigma_n, size=n)

# Generate non-linear function.

def f(x):

f = np.sin((4*np.pi)*x) + np.sin((7*np.pi)*x) + np.sin((3*np.pi)*x)

return(f)

f_x = f(x)

# Observed target variable.

y = f_x + epsilonConstruct Test Set

n_star = n + 300

x_star = np.linspace(start=0, stop=(L + 0.5), num=n_star)

X_star = x_star.reshape(n_star, d)Define Kernel and Model

# Define kernel parameters.

l = 0.1

sigma_f = 2

# Define kernel object.

kernel = ConstantKernel(constant_value=sigma_f, constant_value_bounds=(1e-2, 1e2)) \

* RBF(length_scale=l, length_scale_bounds=(1e-2, 1e2))

# Define GaussianProcessRegressor object.

gp = GaussianProcessRegressor(kernel=kernel, alpha=sigma_n**2, n_restarts_optimizer=10, )Model Fit + Predictions

# Fit to data using Maximum Likelihood Estimation of the parameters.

gp.fit(X, y)

# Make the prediction on test set.

y_pred = gp.predict(X_star)# Generate samples from posterior distribution.

y_hat_samples = gp.sample_y(X_star, n_samples=n_star)

# Compute the mean of the sample.

y_hat = np.apply_over_axes(func=np.mean, a=y_hat_samples, axes=1).squeeze()

# Compute the standard deviation of the sample.

y_hat_sd = np.apply_over_axes(func=np.std, a=y_hat_samples, axes=1).squeeze()Visualize Results

fig, ax = plt.subplots(figsize=(15, 8))

# Plot training data.

sns.scatterplot(x=x, y=y, label='training data', ax=ax);

# Plot "true" linear fit.

sns.lineplot(

x=x_star,

y=f(x_star),

color='red',

label='f(x)',

ax=ax

)

# Plot corridor.

ax.fill_between(

x=x_star,

y1=(y_hat - 2*y_hat_sd),

y2=(y_hat + 2*y_hat_sd),

color='green',

alpha=0.3,

label='Credible Interval'

)

# Plot prediction.

sns.lineplot(x=x_star, y=y_pred, color='green', label='pred')

ax.set(title='Prediction & Credible Interval')

ax.legend(loc='lower left');

This is just the the beginning. There are many questions which are still open:

- How does the hyperparameter selection works?

- Which other kernels are possible?

- How to generate new kernels? Can we combine kernels to get new ones?

- How to apply these techniques to classification problems?

I hope to keep exploring these and more questions in future posts.

Acknowledgments: Thank you to Fox Weng for pointing out a typo in one of the formulas presented in a previous version of the post.