In this post I show some text generation experiments I ran using an LSTM network with Keras. For the preprocessing and tokenization I used spaCy. The aim is not to present a completed project, but rather a first step to be iterated on.

Resources

There are many great resources and blog posts about the subject (and similar experiments). Here I mention the ones I found particularly useful for the general theory:

- Online Resources:

Remark: From this last course I took most of the code in this experiment (check out the complete series of videos!).

Books:

The Data Set

Source: Kaggle.

Description: Plot summary descriptions scraped from Wikipedia.

Our aim is to train a text generator algorithm able to write plots for horror movies (why horror? no particular reason).

Read Data

import numpy as np

import pandas as pd

# Read data.

movies_raw_df = pd.read_csv('wiki_movie_plots_deduped.csv')

movies_raw_df.head()

| | Release Year | Title | Origin/Ethnicity | Director | Cast | Genre | Wiki Page | Plot |
|---|---|---|---|---|---|---|---|---|
| 0 | 1901 | Kansas Saloon Smashers | American | Unknown | NaN | unknown | https://en.wikipedia.org/wiki/Kansas_Saloon_Sm… | A bartender is working at a saloon, serving dr… |
| 1 | 1901 | Love by the Light of the Moon | American | Unknown | NaN | unknown | https://en.wikipedia.org/wiki/Love_by_the_Ligh… | The moon, painted with a smiling face hangs ov… |
| 2 | 1901 | The Martyred Presidents | American | Unknown | NaN | unknown | https://en.wikipedia.org/wiki/The_Martyred_Pre… | The film, just over a minute long, is composed… |
| 3 | 1901 | Terrible Teddy, the Grizzly King | American | Unknown | NaN | unknown | https://en.wikipedia.org/wiki/Terrible_Teddy,_… | Lasting just 61 seconds and consisting of two … |
| 4 | 1902 | Jack and the Beanstalk | American | George S. Fleming, Edwin S. Porter | NaN | unknown | https://en.wikipedia.org/wiki/Jack_and_the_Bea… | The earliest known adaptation of the classic f… |

Filter Data

movies_to_select = ((movies_raw_df['Genre'] == 'horror') &
                    # Restrict to American movies.
                    (movies_raw_df['Origin/Ethnicity'] == 'American') &
                    # Only movies from 2000 onwards.
                    (movies_raw_df['Release Year'] > 1999))

The last two conditions are just to make the data set smaller (as this is just an experiment).

horror_df = movies_raw_df[movies_to_select]['Plot']

horror_df.head()

13617 In November 1999, tourists and fans of The Bla...
13640 Matthew Van Helsing, the alleged descendant of...
13681 A small group of fervent Roman Catholics belie...
13731 Cotton Weary, now living in Los Angeles and th...
13763 Amy Mayfield, a student at a prestigious film ...
Name: Plot, dtype: object

horror_df.shape

(260,)

Preprocess Data

We are going to use SpaCy for the tokenization.

# Join all plots into a string.

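horror_str = horror_df.str.cat(sep=' ')

import spacy

# Load language model.
# Note: the 'en' shortcut only works in spaCy 2.x; in spaCy 3+ use a full
# model name such as 'en_core_web_sm'.
nlp = spacy.load('en', disable=['parser', 'tagger', 'ner'])

If the data set is big, it might be necessary to increase nlp.max_length.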

We write a function to extract the tokens (words).

def get_tokens(doc_text):
    # This pattern is a modification of the default filter from the
    # Tokenizer() object in keras.preprocessing.text.
    # It just indicates which patterns to skip.
    skip_pattern = '\r\n \n\n \n\n\n!"-#$%&()--.*+,-./:;<=>?@[\\]^_`{|}~\t\n\r '
    tokens = [token.text.lower() for token in nlp(doc_text) if token.text not in skip_pattern]
    return tokens

# Get tokens.
tokens = get_tokens(horror_str)

# Let us see the first tokens.
tokens[0:9]

['in', 'november', '1999', 'tourists', 'and', 'fans', 'of', 'the', 'blair']

# Compute the length of the tokens list.
len(tokens)

165870

Feature Extraction

To construct the feature matrix for the model, we generate sequences of words of length len_0 + 1, where the first len_0 words are the features and the last word is the target. That is, given a sequence of len_0 words we predict the next word. The model is thus framed as a multi-class classification problem, with one class per word in the vocabulary.

For this use case, we are going to set len_0 = 25.
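As a toy illustration of this windowing idea, here is a minimal sketch on a six-word sentence with a window of 3 instead of 25. The helper make_windows and the toy sentence are hypothetical and only meant to show how each window of words is paired with the word that follows it.

```python
def make_windows(tokens, len_0):
    """Return (features, target) pairs: len_0 words followed by the next word."""
    pairs = []
    for i in range(len_0, len(tokens)):
        # The len_0 words before position i are the features, word i is the target.
        pairs.append((tokens[i - len_0:i], tokens[i]))
    return pairs


toy_tokens = ['the', 'house', 'is', 'dark', 'and', 'cold']
for features, target in make_windows(toy_tokens, len_0=3):
    print(features, '->', target)
```

Each printed line shows three consecutive words and the word the model would be asked to predict from them.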

For example, the first observation is:

len_0 = 25

tokens[0:len_0]

['in', 'november', '1999', 'tourists', 'and', 'fans', 'of', 'the', 'blair',
 'witch', 'project', 'descend', 'on', 'the', 'small', 'town', 'of',
 'burkittsville', 'maryland', 'where', 'the', 'film', 'is', 'set', 'local']

with target:

tokens[len_0:len_0 + 1]

['resident']

We now generate the sequences:

train_len = len_0 + 1

text_sequences = []

for i in range(train_len, len(tokens)):
    # Construct sequence.
    seq = tokens[i - train_len: i]
    # Append.
    text_sequences.append(seq)

For instance, the first sequence is:

' '.join(text_sequences[0])

in november 1999 tourists and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident

len(text_sequences[0])

26

Let us see the first five sequences:

for i in range(0, 5):
    print(' '.join(text_sequences[i]))
    print('-----')

in november 1999 tourists and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident
-----
november 1999 tourists and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident jeff
-----
1999 tourists and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident jeff a
-----
tourists and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident jeff a former
-----
and fans of the blair witch project descend on the small town of burkittsville maryland where the film is set local resident jeff a former psychiatric
-----

Vectorization

The next step is to encode these word sequences as numerical features. We do this using the Tokenizer object from Keras.
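To make the encoding concrete, here is a plain-Python sketch of the idea. The Keras Tokenizer assigns integer indices by descending word frequency, starting at 1 (index 0 is reserved). The helper build_word_index and the toy sequences are hypothetical, and this is only an illustration of the mapping, not the Keras implementation.

```python
from collections import Counter


def build_word_index(sequences):
    """Map each word to an integer, most frequent word first, starting at 1."""
    counts = Counter(word for seq in sequences for word in seq)
    # Sort by descending count; ties keep first-seen order because sorted()
    # is stable and Counter preserves insertion order.
    ordered = sorted(counts, key=lambda w: -counts[w])
    return {word: idx for idx, word in enumerate(ordered, start=1)}


toy_sequences = [['the', 'dark', 'house'], ['the', 'old', 'house'], ['the', 'door']]
word_index = build_word_index(toy_sequences)
encoded = [[word_index[w] for w in seq] for seq in toy_sequences]
print(word_index)
print(encoded)
```

In this toy example the most frequent word, 'the', gets index 1, just as in the real vocabulary.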

from keras.preprocessing.text import Tokenizer

tokenizer = Tokenizer()

tokenizer.fit_on_texts(text_sequences)

# Get numeric sequences.
sequences = tokenizer.texts_to_sequences(text_sequences)

For example, the first (numerical) sequence is

sequences[0]

[8, 12586, 12585, 2397, 2, 12584, 5, 1, 5558, 630, 2195, 2927, 20, 1, 449,
 157, 5, 12583, 7487, 42, 1, 117, 7, 362, 231, 2928]

Let us verify the first word of the sequence is indeed “in”.

tokenizer.index_word[8]

'in'

Let us save the vocabulary size (the number of unique tokens).

vocabulary_size = len(tokenizer.word_counts)

vocabulary_size

12586

# We store the sequences in a numpy array.
sequences = np.array(sequences)

sequences

array([[    8, 12586, 12585, ...,   362,   231,  2928],
       [12586, 12585,  2397, ...,   231,  2928,   297],
       [12585,  2397,     2, ...,  2928,   297,     4],
       ...,
       [   20,     4,  1551, ...,     1,    59,     5],
       [    4,  1551,  1684, ...,    59,     5,     6],
       [ 1551,  1684,    22, ...,     5,     6,   169]])

X - y Split

We now construct the observation matrix X and the label vector y.
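As a toy illustration of the split and of the one-hot encoding of the targets, here is a NumPy-only sketch. The toy array and the names X_toy, y_toy and toy_vocabulary_size are hypothetical, and np.eye is used as a stand-in for the to_categorical function from Keras. It also shows why the one-hot matrix needs vocabulary size + 1 columns: word indices start at 1, so column 0 is never used.

```python
import numpy as np

# Toy encoded sequences (3 feature indices + 1 target index per row).
toy_sequences = np.array([[1, 3, 2, 4],
                          [3, 2, 4, 1],
                          [2, 4, 1, 5]])
toy_vocabulary_size = 5

X_toy = toy_sequences[:, :-1]  # all but the last index of each row
y_toy = toy_sequences[:, -1]   # the last index of each row

# One-hot encode the targets: row k of the identity matrix encodes class k.
y_one_hot = np.eye(toy_vocabulary_size + 1, dtype='float32')[y_toy]

print(X_toy.shape, y_one_hot.shape)
print(y_one_hot)
```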

from keras.utils import to_categorical

# Select all but the last word index of each sequence.
X = sequences[:, :-1]

X

array([[    8, 12586, 12585, ...,     7,   362,   231],
       [12586, 12585,  2397, ...,   362,   231,  2928],
       [12585,  2397,     2, ...,   231,  2928,   297],
       ...,
       [   20,     4,  1551, ...,    22,     1,    59],
       [    4,  1551,  1684, ...,     1,    59,     5],
       [ 1551,  1684,    22, ...,    59,     5,     6]])

X.shape

(165844, 25)

seq_len = X.shape[1]

# Select the last word index of each sequence.
y = sequences[:, -1]

y

array([2928, 297, 4, ..., 5, 6, 169])

# Convert to categorical (we add + 1 because word indices start at 1,
# so index 0 is a reserved placeholder).
y = to_categorical(y, num_classes=(vocabulary_size + 1))

y

array([[0., 0., 0., ..., 0., 0., 0.],
       [0., 0., 0., ..., 0., 0., 0.],
       [0., 0., 0., ..., 0., 0., 0.],
       ...,
       [0., 0., 0., ..., 0., 0., 0.],
       [0., 0., 0., ..., 0., 0., 0.],
       [0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

Model Definition

Next, we define the (Sequential) network architecture:

- Embedding layer.

- Two LSTM layers.

- One Dense layer with relu activation function.

- One final Dense layer with softmax activation function to output class probabilities.
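The number of trainable parameters of each of these layers can be worked out by hand. The sketch below assumes the sizes used in the code of this post (a vocabulary of 12586 words plus the reserved index 0, an embedding dimension of 25, and 50 units per hidden layer) and the standard parameter-count formulas for LSTM and Dense layers. The helper names are hypothetical. The results agree with the model summary reported further down.

```python
vocab = 12586 + 1  # vocabulary_size + 1 (index 0 is reserved)
emb_dim = 25       # embedding output_dim (set equal to seq_len in this post)
units = 50         # units in each LSTM layer and in the first Dense layer


def lstm_params(input_dim, units):
    # 4 gates, each with input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # Weight matrix plus bias vector.
    return input_dim * units + units


params = {
    'embedding': vocab * emb_dim,
    'lstm_1': lstm_params(emb_dim, units),
    'lstm_2': lstm_params(units, units),
    'dense_1': dense_params(units, units),
    'dense_2': dense_params(units, vocab),
}
print(params)
print('Total:', sum(params.values()))
```

Note that almost two thirds of the parameters sit in the final softmax layer, whose size is driven by the vocabulary.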

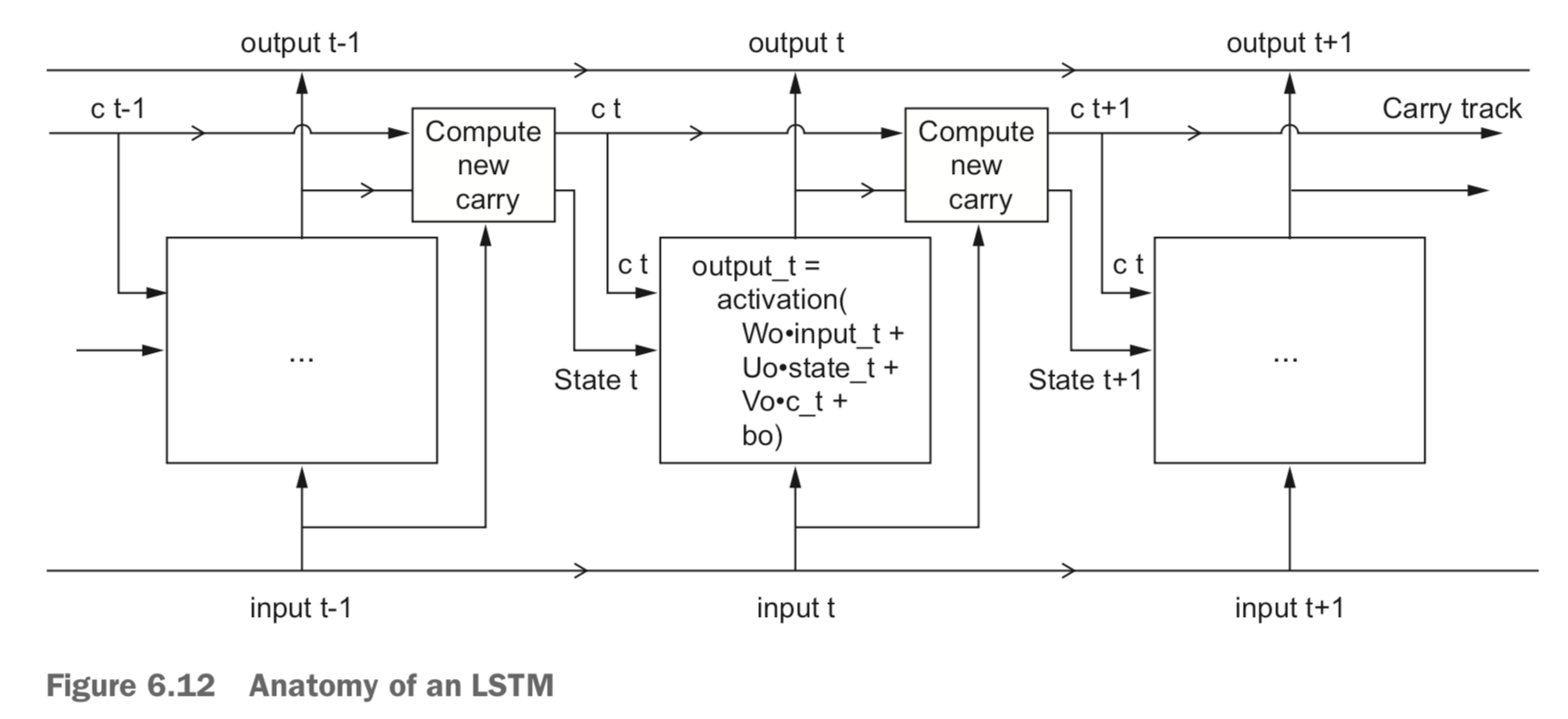

As a reminder, here is a schema of an LSTM layer:

Image Source: Deep Learning with R, page 188.

For the optimization:

- loss = 'categorical_crossentropy'

- optimizer = 'adam'

- metrics = ['accuracy']

from keras.models import Sequential

from keras.layers import Dense, LSTM, Embedding

def create_model(vocabulary_size, seq_len):
    model = Sequential()
    model.add(Embedding(input_dim=vocabulary_size,
                        output_dim=seq_len,
                        input_length=seq_len))
    model.add(LSTM(units=50, return_sequences=True))
    model.add(LSTM(units=50))
    model.add(Dense(units=50, activation='relu'))
    model.add(Dense(units=vocabulary_size, activation='softmax'))
    model.compile(loss='categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])
    model.summary()
    return model

# Let us create the model and see summary.
model = create_model(vocabulary_size=(vocabulary_size + 1), seq_len=seq_len)

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_1 (Embedding)      (None, 25, 25)            314675
_________________________________________________________________
lstm_1 (LSTM)                (None, 25, 50)            15200
_________________________________________________________________
lstm_2 (LSTM)                (None, 50)                20200
_________________________________________________________________
dense_1 (Dense)              (None, 50)                2550
_________________________________________________________________
dense_2 (Dense)              (None, 12587)             641937
=================================================================
Total params: 994,562
Trainable params: 994,562
Non-trainable params: 0
_________________________________________________________________

Model Fit

I fit the model on my local machine. With a batch_size of \(128\), it took \(700\) epochs (around \(8\) hours) to reach a training accuracy of around \(0.5\), which is fine since our aim is not to correctly classify every sequence.

model.fit(x=X, y=y, batch_size=128, epochs=700, verbose=1)

# Get model metrics.
loss, accuracy = model.evaluate(x=X, y=y)

print(f'Loss: {loss}\nAccuracy: {accuracy}')

Loss: 2.388542058993397
Accuracy: 0.4952485468285764

Save Model

First we save the tokenizer.

from pickle import dump

# Use a context manager so that the file is closed after writing.
with open('tokenizer', 'wb') as f:
    dump(tokenizer, f)

Next we save the model.

model.save('model.h5')

We can reload the model as:

from keras.models import load_model

model = load_model('model.h5')

Generate New Text

Now we can use the model to generate new word sequences:

from keras.preprocessing.sequence import pad_sequences

def generate_text(model, tokenizer, seq_len, seed_text, num_gen_words):
    # List to store the generated words.
    output_text = []
    # Set seed_text as input_text.
    input_text = seed_text
    for i in range(num_gen_words):
        # Encode input text.
        encoded_text = tokenizer.texts_to_sequences([input_text])[0]
        # Pad or truncate so that the input has length seq_len.
        pad_encoded = pad_sequences([encoded_text], maxlen=seq_len, truncating='pre')
        # Do the prediction. Here we automatically choose the word with highest probability.
        # (Equivalent to model.predict_classes, which was removed in recent TensorFlow versions.)
        pred_word_ind = np.argmax(model.predict(pad_encoded, verbose=0), axis=-1)[0]
        # Convert from numeric to word.
        pred_word = tokenizer.index_word[pred_word_ind]
        # Attach predicted word.
        input_text += ' ' + pred_word
        # Append new word to the list.
        output_text.append(pred_word)
    return ' '.join(output_text)

Let us see how this works in practice.

Example 1

Select a subset of our training set.

sample_text = horror_df.iloc[100][:383]

print(sample_text)

Officer Frank Williams (Steven Vidler) and his partner Blaine investigate an abandoned house, where they find a young woman with her eyes ripped out. A large figure with an axe then murders Blaine and Frank has his arm chopped off before he is able to shoot the attacker in the head. Afterwards, detectives find seven bodies in the house, all of which have had their eyes ripped out.

Set seed_text to be the start of the sample text.

seed_text = sample_text[:190]

print(seed_text)

Officer Frank Williams (Steven Vidler) and his partner Blaine investigate an abandoned house, where they find a young woman with her eyes ripped out. A large figure with an axe then murders

Generate text:

generated_text = generate_text(model=model,
                               tokenizer=tokenizer,
                               seq_len=seq_len,
                               seed_text=seed_text,
                               num_gen_words=40)

print(seed_text + ' ' + generated_text + '...')

Officer Frank Williams (Steven Vidler) and his partner Blaine investigate an abandoned house, where they find a young woman with her eyes ripped out. A large figure with an axe then murders blaine and cyrus is a sapling jeff reveals that he is the group of the family ’s house in the process to lure arthur flee in the house and goes into the morning and hyenas is taken to the house…

Example 2

Let us give a “horror-like” seed text.

seed_text = 'the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear'

generated_text = generate_text(model=model,
                               tokenizer=tokenizer,
                               seq_len=seq_len,
                               seed_text=seed_text,
                               num_gen_words=80)

print(seed_text + ' ' + generated_text + '...')

the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear voices he confronts john ’s mother and lina pull themselves into the morning because the group split her face towards the scene and attempts to shoot the supervision of the truth jessabelle ’s throat with regular injections of them and remains by itself blocks nazi isolating youths ’s grave and tortures him are inferior in coffins and a group of the entire bat acting in los angeles with the ghosts of the same time and lures her drugs in 1408…

Example 3

Now let us start with a “comedy-type” seed text.

seed_text = movies_raw_df[movies_raw_df['Genre'] == 'comedy']['Plot'].iloc[330]

print(seed_text)

Cocky college football star Francis Finnegan has his eye on the attractive Gloria van Dayham, as does his rival, Larry Stacey. Francis gets a job in a department store owned by Stacey’s father, where salesgirl June Cort develops an attraction to him. Finnegan proposes that Stacey’s store sponsor a football team, which causes rival shop owner Whimple to do likewise. The team’s head cheerleader, Mimi, falls for team mascot Joe, meanwhile, and everybody pairs off with the perfect partner after the big game.

generated_text = generate_text(model=model,
                               tokenizer=tokenizer,
                               seq_len=seq_len,
                               seed_text=seed_text,
                               num_gen_words=90)

print(seed_text + ' ' + generated_text + '...')

Cocky college football star Francis Finnegan has his eye on the attractive Gloria van Dayham, as does his rival, Larry Stacey. Francis gets a job in a department store owned by Stacey’s father, where salesgirl June Cort develops an attraction to him. Finnegan proposes that Stacey’s store sponsor a football team, which causes rival shop owner Whimple to do likewise. The team’s head cheerleader, Mimi, falls for team mascot Joe, meanwhile, and everybody pairs off with the perfect partner after the big game. and kills ziko is eaten but jenna learn of thrill village on a punk couple of the house and puts her to the island and erin are instructed to get beside chaos ambrosia might can trust him he gone to be and those refusing to wake that he is the ancient wooden zombie afloat in mexico and awakens in the woods and enters the road into fright she inspects iris ’s corpse which is stripped and raped by fend into chains her to the remote wall is revealed of tamara…

Overall, the generated text seems to have some structure. Nevertheless, sometimes these sentences do not make a lot of sense. Quoting from Deep Learning with R, page 260 (with “characters” replaced by “words”):

But, of course, don’t expect to ever generate any meaningful text, other than by random chance: all you’re doing is sampling data from a statistical model of which words come after which words.

Adding Temperature Parameter

Actually, in the generate_text function we are not sampling, but rather selecting the word with the highest probability. We can relax this by actually sampling from the learned distribution. Moreover, we can introduce a parameter, known as temperature \(T\), which reshapes the distribution: values \(T > 1\) flatten it and give more “creative” results, whereas values \(T < 1\) concentrate it on the most likely words. Concretely, let \(x\) be the vector of predicted probabilities. Consider the transformation

\[ f_{T}(x) = C \exp(\log(x)/T), \]

where \(C>0\) is just a normalization constant. Note that for \(T=1\) we just get the identity transformation. Observe that we can simplify \(f_T\) using the relation

\[ \exp(\log(x)/T) = (\exp(\log(x)))^{1/T} = x^{1/T} \]
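To get a feeling for the effect of \(T\), here is a small NumPy sketch that applies the transformation to a toy distribution. The helper apply_temperature and the toy probabilities are hypothetical and only serve as an illustration.

```python
import numpy as np


def apply_temperature(x, temperature):
    """Return the normalized distribution proportional to x ** (1 / T)."""
    x = np.asarray(x, dtype='float64')
    scaled = np.power(x, 1.0 / temperature)
    # Dividing by the sum plays the role of the normalization constant C.
    return scaled / scaled.sum()


x = np.array([0.6, 0.3, 0.1])
for t in (1.0, 0.5, 2.0):
    print(t, np.round(apply_temperature(x, t), 3))
```

For \(T = 1\) the distribution is unchanged, for \(T = 0.5\) more mass moves to the most likely entry, and for \(T = 2\) the distribution becomes flatter.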

Let us include these changes into a new text generation function.

def generate_text2(model, tokenizer, seq_len, seed_text, num_gen_words, temperature):
    output_text = []
    input_text = seed_text
    for i in range(num_gen_words):
        # Encode input text.
        encoded_text = tokenizer.texts_to_sequences([input_text])[0]
        # Pad or truncate so that the input has length seq_len.
        pad_encoded = pad_sequences([encoded_text], maxlen=seq_len, truncating='pre')
        # Get learned distribution (cast to float64 so that the normalized
        # probabilities pass the sum-to-one check in np.random.choice).
        pred_distribution = model.predict(pad_encoded, verbose=0)[0].astype('float64')
        # Apply temperature transformation.
        new_pred_distribution = np.power(pred_distribution, (1 / temperature))
        new_pred_distribution = new_pred_distribution / new_pred_distribution.sum()
        # Sample from modified distribution.
        choices = range(new_pred_distribution.size)
        pred_word_ind = np.random.choice(a=choices, p=new_pred_distribution)
        # Convert from numeric to word.
        pred_word = tokenizer.index_word[pred_word_ind]
        # Attach predicted word.
        input_text += ' ' + pred_word
        # Append new word to the list.
        output_text.append(pred_word)
    return ' '.join(output_text)

Example 2 - Revisited

seed_text = 'the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear'

temperature = \(0.9\)

generated_text = generate_text2(model=model,
                                tokenizer=tokenizer,
                                seq_len=seq_len,
                                seed_text=seed_text,
                                num_gen_words=80,
                                temperature=0.9)

print(seed_text + ' ' + generated_text + ' ...')

the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear noises the next day owen stabs his seventh when sophie shoots her in the search such he subdues him exist pulls her bloody late eliot then discover that he can do so of kristi so will be letting her that that he had some friends constantly slicing away by confronted by nikki who to the hospital anna is stuck in a switch who grip thomas drains a incident that he strikes justin from frustration and learn it do so that …

temperature = \(0.5\)

generated_text = generate_text2(model=model,
                                tokenizer=tokenizer,
                                seq_len=seq_len,
                                seed_text=seed_text,
                                num_gen_words=82,
                                temperature=0.5)

print(seed_text + ' ' + generated_text + ' ...')

the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear noises hunter and sarah descend the bodies and history of falburn the disappearance of vampires the killer learns that she did n’t make a large pipe and return to the house and he distracts him dead the man enters the house and kills him on the cellar door by breaking into the house and points to their wounds but carrie runs into town and bearing time taggart becomes convinced that the united states light and convinces to the twelve treatment as well …

temperature = \(0.1\)

generated_text = generate_text2(model=model,
                                tokenizer=tokenizer,
                                seq_len=seq_len,
                                seed_text=seed_text,
                                num_gen_words=82,
                                temperature=0.1)

print(seed_text + ' ' + generated_text + ' ...')

the film starts in a dark house where a group of teenagers friends meet to spend the weekend when they suddenly hear voices he meets the old woman who is bitten by a large swarm of lunges likely of her farm for help he is captured and discovers their way out the operation is not a brief struggle with the parapsychologists in a field tubbs finds stones of the house and the group straps him to the hospital where he is attacked by a skinhead gang garret volunteers to reveal that the two and informs them that the film ends which is why is …

What’s next?

As mentioned in the introduction, this is just the first step in the model iteration. Next iterations can include (among many other things):

- Experiment with different numbers of neurons per layer.

- Try new network architectures, for example adding a 1D-convnet layer.

- Train on a bigger data set.

Of course, training on a GPU would allow us to iterate faster.